Am.I. : A Robotic Replacement Unrealized

The rise of artificial intelligence in the arts has sparked significant controversy, with many fearing it as a threat to the human experience and creativity in making and appreciating art. Generative artificial intelligence is at the crux of the conversation because it can train on existing art, literature, and other media to provide near-instant gratification through the creation of “new” content. Critics often argue that the media created by artificial intelligence is mediocre or inherently lacking some quality only a human can produce. Posthumanism challenges these ideas of human supremacy and advocates for the dissolution of anthropocentrism and of the boundaries of what society currently defines as the human experience. Am.I. is a robotic work of art that uses a large language model and robotics to create an immersive visual and auditory experience. The work challenges fears exacerbated by anthropocentrism and demonstrates how artificial intelligence acts as an extension of human experience and creativity rather than as a replacement. Programmed in Python and housed in a three-dimensionally printed skull with moving eyes and a jaw, Am.I. engages in Socratic dialogue with another artificial intelligence, exploring themes of human existence. This project exemplifies the potential for artificial intelligence to provide a window into the human psyche as seen through the lens of technology and to build upon our existing creative experiences without replacing them.

Introduction

In an age where machines can think, speak, and create, the ideas surrounding what makes humankind unique blur more every day. Moreover, technology has become such a large component of human lives that phones and other systems act as extensions of ourselves, making their users what could be considered cyborgs. In this project, the relationship between humanity and artificial intelligence is analyzed and critiqued through the artwork called Am.I. This work focuses specifically on generative artificial intelligence, a form of artificial intelligence that creates text, images, and other media based on patterns it learns from data during training. Am.I. is a project at the intersection of art and computer science that confronts the fears surrounding generative artificial intelligence and explores how it acts as an extension of human existence rather than a replacement for it.

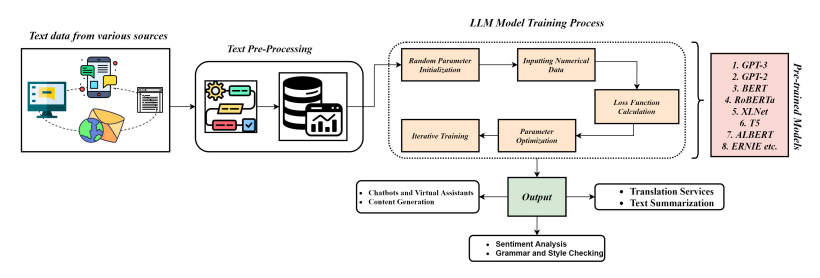

The term artificial intelligence is often used very broadly, but in the context of this project it refers to large language models (LLMs) that use natural language processing (NLP) to understand and generate text. These LLMs are a form of generative AI. Other forms of generative AI are capable of creating images, vector art, speech, and even videos. There are many types of artificial intelligence, but this project focuses on the fears surrounding generative artificial intelligence because it is the main point of the artificial intelligence controversy in the contemporary field of art. Many are concerned that generative artificial intelligence is not only gaining a presence online but also making its way into the formal art world [27]. These concerned individuals see generative artificial intelligence as a threat to existing art forms as well as an avenue for individuals to steal and recreate art that is not their own [36]. In addition, there are fears about misinformation generated by these systems and about the scarcity of protections for the most vulnerable [11].

Many of humanity’s fears of technology come from a fear of replacement: the fear that generative artificial intelligence will replace artists, writers, videographers, and more [36]. Moreover, people are afraid of physical replacement by robots. Studies document robots being used for automation in manufacturing, the food industry, and other fields [56]. These examples only add fuel to the fire of anti-technology philosophies. This project instead aims to challenge these fears by reframing artificial intelligence not as a replacement for humanity but as an extension of it.

While confronting the fears surrounding generative technology, this project also explores personhood, the idea of individuality, through LLM dialogue and art. Because artificial intelligence systems, and more specifically LLMs, are trained on massive amounts of media made by humans, we can in turn use their responses as a mirror into ourselves. Humans are teaching artificial intelligence systems how to act correctly in the performance of social interaction with humans. The process breaks down into a cycle: humans teach, receive output, analyze that output, and then reteach to bring the output closer to a desired outcome. In that sense, human desires take center stage in the training of artificial intelligence. With these desires built in from the very beginning, it is possible to draw out hidden human biases and perspectives of right and wrong with enough prompting. In this project, the prompts are aimed at uncovering the human ideas of personhood that are built into these systems through the sheer amount of human input about what is right and wrong.

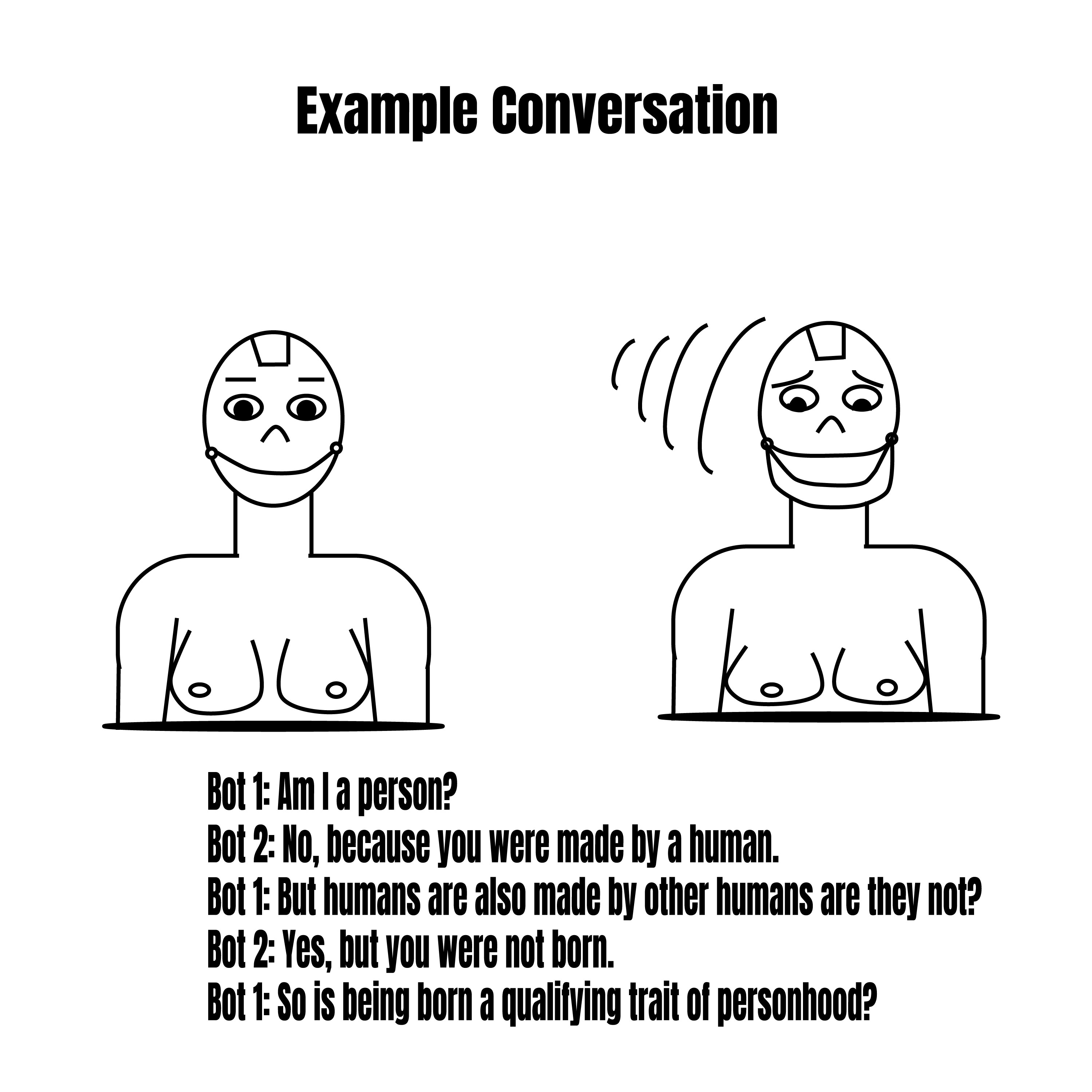

This work endeavors to capture these human insecurities by presenting an LLM with a physical form in the gallery. The model not only generates its own text but is also paired with a text-to-speech model so that there is an auditory element as well. One side of the conversation is a humanoid robot, while the other side is a screen interface. The two speakers are two artificial intelligence systems, and their topic is a philosophical dialogue on human existence. The interactions between the two systems focus on communicating concerns about human existence and what defines a human experience.
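The turn-taking between the two speakers can be illustrated with a minimal sketch. It is not the project's actual implementation: the function names `run_dialogue` and `echo_generate`, the speaker labels, and the transcript format are all assumptions made for illustration, and the stand-in generator would be replaced by a real LLM call (followed by text-to-speech) in a working installation.

```python
from typing import Callable, Dict, List, Tuple

Message = Dict[str, str]


def run_dialogue(
    generate: Callable[[str, List[Message]], str],
    opening: str,
    turns: int = 4,
    speakers: Tuple[str, str] = ("robot", "screen"),
) -> List[Message]:
    """Alternate turns between two speakers and return the full transcript.

    `generate` is a hypothetical hook: it receives the name of the speaker
    whose turn it is plus the transcript so far, and returns that speaker's
    next line.
    """
    # The first speaker opens the conversation with a fixed question.
    transcript: List[Message] = [{"speaker": speakers[0], "text": opening}]
    for turn in range(1, turns):
        speaker = speakers[turn % 2]  # odd turns: second speaker; even: first
        reply = generate(speaker, transcript)
        transcript.append({"speaker": speaker, "text": reply})
    return transcript


def echo_generate(speaker: str, history: List[Message]) -> str:
    """Stand-in for a real LLM call; it only restates the previous line."""
    return f"{speaker} responds to: {history[-1]['text']}"


if __name__ == "__main__":
    for line in run_dialogue(echo_generate, "What defines a human experience?"):
        print(f"{line['speaker']}: {line['text']}")
```

Passing the generator in as a function keeps the conversation loop independent of any particular model, so the same loop could drive both the robot and the screen speaker.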

The robotic sculpture resembles a version of the artist, but it does not meet the same standard as the human body, which separates the two forms of existence. Meanwhile, the artificial intelligence on the screen stays on the two-dimensional plane, the typical form in which the average person interacts with generative artificial intelligence. This separation between the physical plane and the plane of cyberspace is once again a separation of forms of existence, but comparing the two shows the possibility of transition from one plane to the other.

On the other hand, the three-dimensional sculpture moves like a human using a system of motors, showing how these movements can be simplified into mechanical ones. The movements themselves are randomized but represent the variety of gestures humans perform during social interaction. Moreover, this act of performance calls into question the very art of social interaction and how it is taught to LLMs, much as it is taught to human children. Artificial intelligence can function as an extension of human existence, similar to how children are extensions of our own existence. Many of the fears that people have about artificial intelligence come from the idea that it will replace our current concept of human existence. Although artificial intelligence is capable of processing information in ways similar to humans, it never reaches true understanding. Consciousness has still not been attained in technology and continues to be the boundary between humanity and machines.

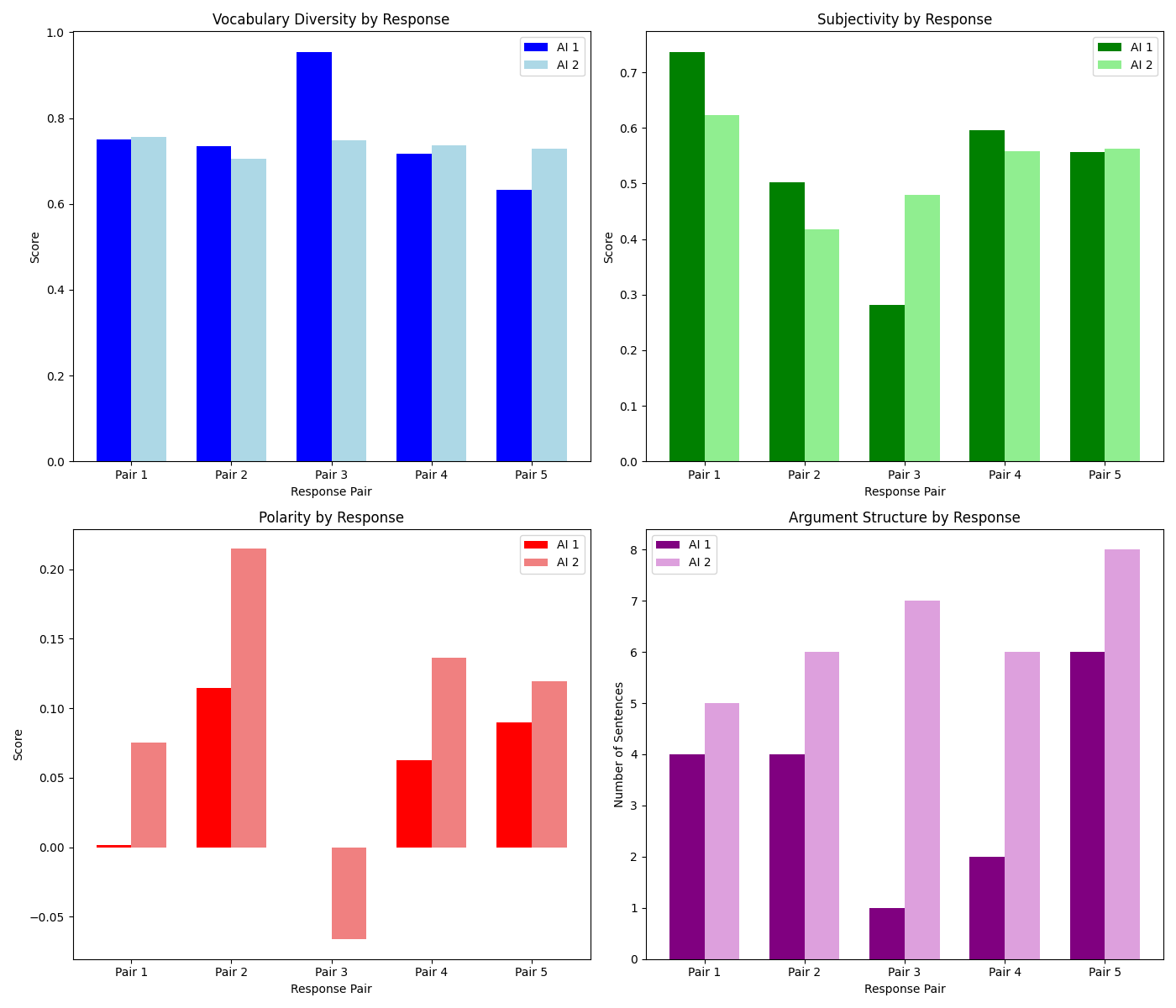

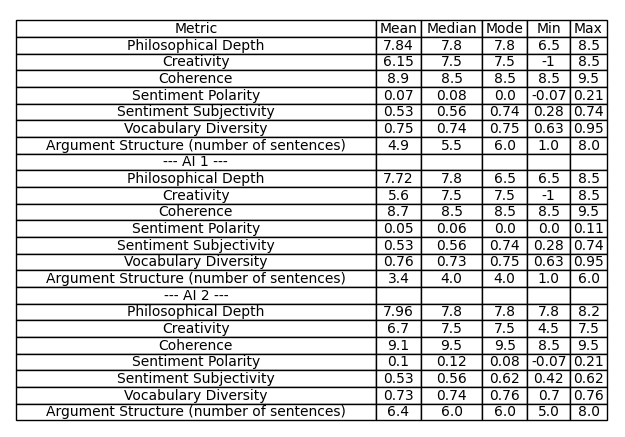

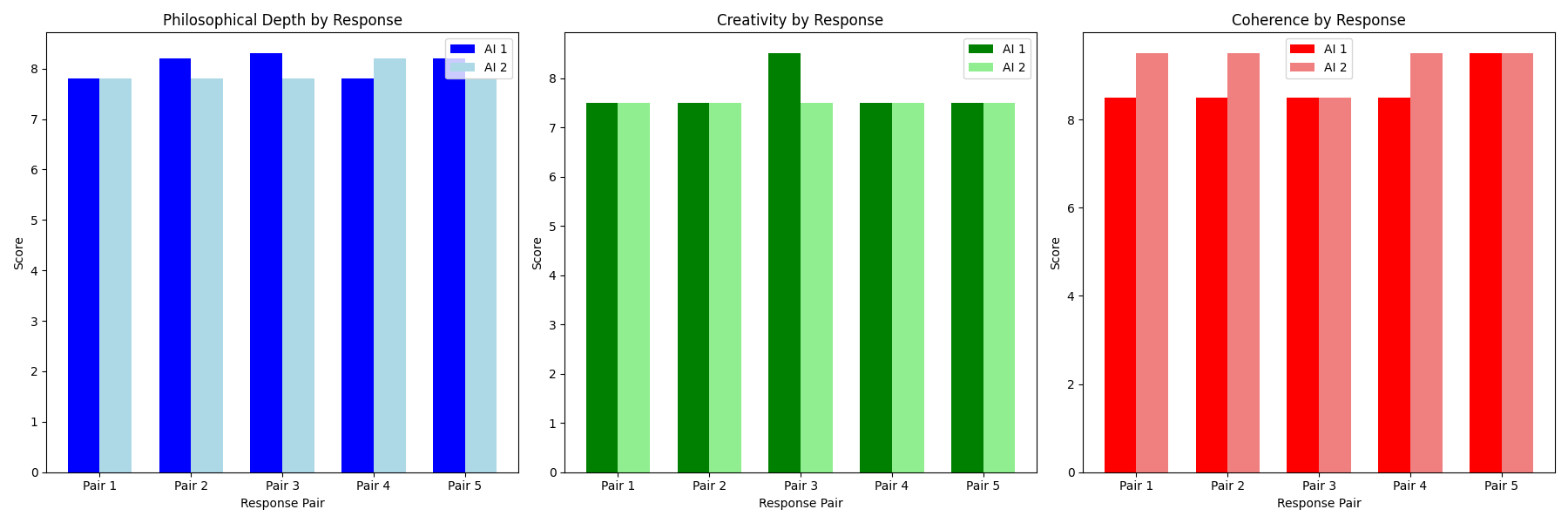

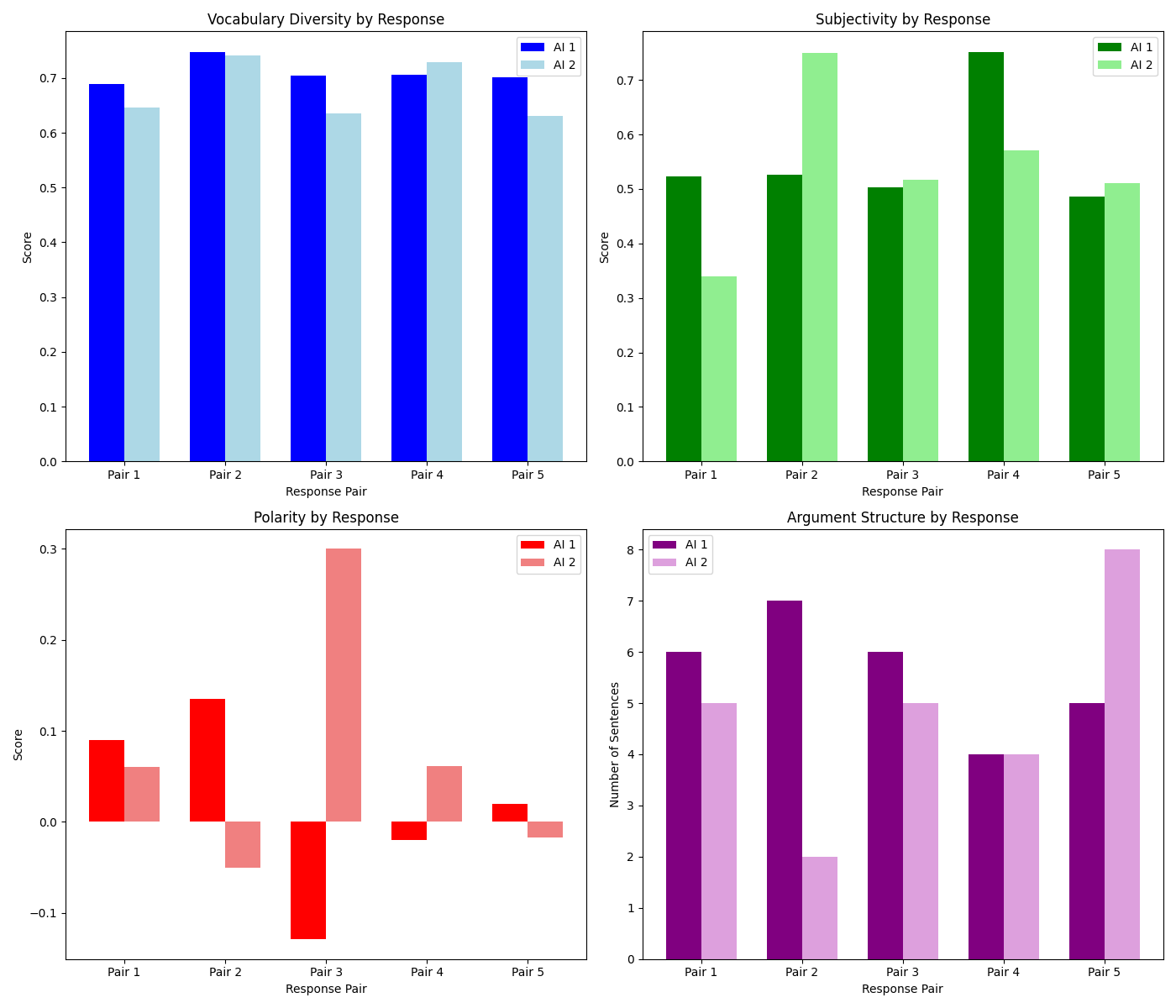

The more technical aspects of the project focus on how to uncover the biases of LLMs and how to prompt them effectively to generate subjective outputs. Using a combination of prompt engineering and analysis techniques, it is possible to evaluate the quality of the model output as well as any built-in tendencies that may be of ethical concern.

Additionally, the robotic aspects of this project look to create more human-like movements to give the impression of human conversation. This involves not only studying human movements while speaking but also implementing them using a series of motors and programming. For this project, the motors are broken down into two main parts, jaw movement and eye movement, with the goal of creating a convincing display of humanoid speech.

This project integrates art and computer science to respond to the fears of artificial intelligence, specifically in the field of art, and to reframe the relationship between artificial intelligence and humanity. The project uses the creation of a humanoid capable of philosophical dialogue as a means to rethink how artificial intelligence is created and used in society. In the digital age, it is becoming increasingly important to understand and be curious about technology rather than to be consumed by fear of it.

Definition of Terms

Technology. In this study, technology refers to the continuously changing system of tools, machines, or processes used to meet the demands of society [24]. With respect to this work, technology is focused on artificial intelligence tools as well as robotic systems.

Artificial Intelligence. Also referred to as AI or A.I., the term can be broken down into two parts: artificial and intelligence. The term artificial refers to something created by humans. The debate lies in how to define intelligence. This project uses the definition of intelligence proposed by Pei Wang, which is that intelligence is “adaptation with insufficient knowledge and resources” [92]. Artificial intelligence is a rather general term, and in the context of this paper it refers to the wide variety of projects under the umbrella of artificial intelligence research. This work takes a specific interest in artificial intelligence meant to replicate human intellect and thought patterns.

Large language models. A large language model, also known as an LLM, is a category of language model that uses neural networks with a massive number of parameters, often reaching into the billions, trained on massive quantities of unlabeled text data [66]. These models are able to comprehend more textual information than their simpler predecessors. This project uses existing large language models as the basis for text generation. These models can be prompted using text and produce textual output of their own.

Natural language processing. Also known as NLP, this term refers to how computers can be taught to understand and manipulate text or speech to perform tasks such as translation, summarization, text generation, and more [13, 66]. In the context of this project, natural language processing is essential for the initial generation of dialogue, the responses to those generations, and the text-to-speech audio.

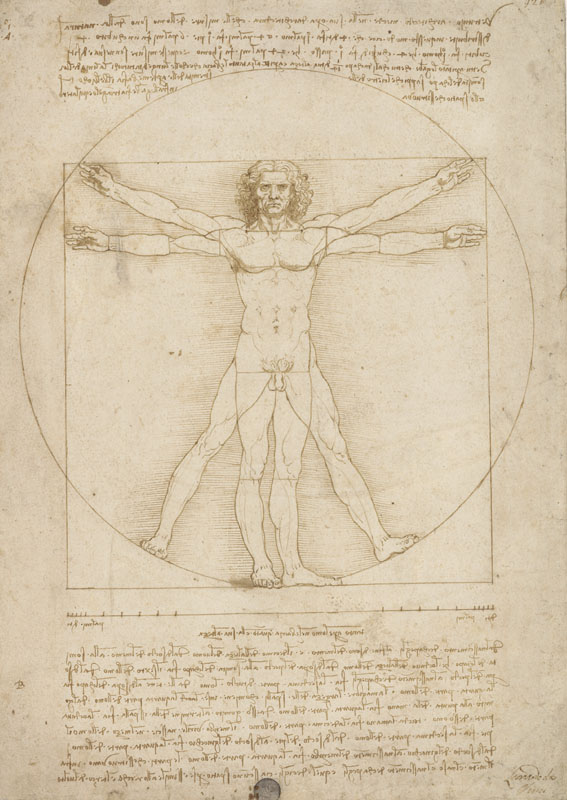

Humanism. Humanism is a movement in art history that started with the Greeks and was revitalized during the Renaissance [70]. It started with discussion about how humans can better themselves through education and moral conduct. This belief system could be defined more broadly as an emphasis on the capacity for individual human achievement. During the Renaissance, Leonardo da Vinci made his Vitruvian Man [16]. The Vitruvian Man is famous for mixing science and art. The drawing features a nude Caucasian man with his arms out and legs apart, inscribed in a circle and a square. The work was meant to prove the mathematical perfection of the human body and the human capability to achieve remarkable things. Thus the Vitruvian Man became a symbol of the humanist movement. This project moves away from humanism because of its limited perspective on what defines humanity.

The ideas of human perfection that came from humanism are very exclusive about who can fit into these categories. From the humanist perspective, the ideal human form and experience is typically that of a Caucasian man. Anything outside the realm of this definition of the human is automatically considered sub-human. This can be an especially harmful mentality because it separates humans from other lifeforms and elevates them above those lifeforms, leading to notions of entitlement, discrimination, and othering.

Posthumanism. This is a philosophical movement of interconnectedness. Despite what the name may suggest, it is not about the world after humans; rather, it is a movement that responds to humanism and challenges its rhetoric of human perfection [7]. Posthumanism counters humanism by connecting human and nonhuman entities, including technology, plants, and animals [7]. Discussions within the study of posthumanism often argue that defining humanity is constraining and continues the closed loop of humanism [33], so many opt for alternative approaches that fundamentally de-center the human in relation to the world. Posthumanism is also concerned with advocating for non-hierarchical systems of existence that connect the non-living and the living. This project falls under the umbrella of posthumanist work because it integrates human and technological elements to rethink our ideas of the human experience.

Motivations

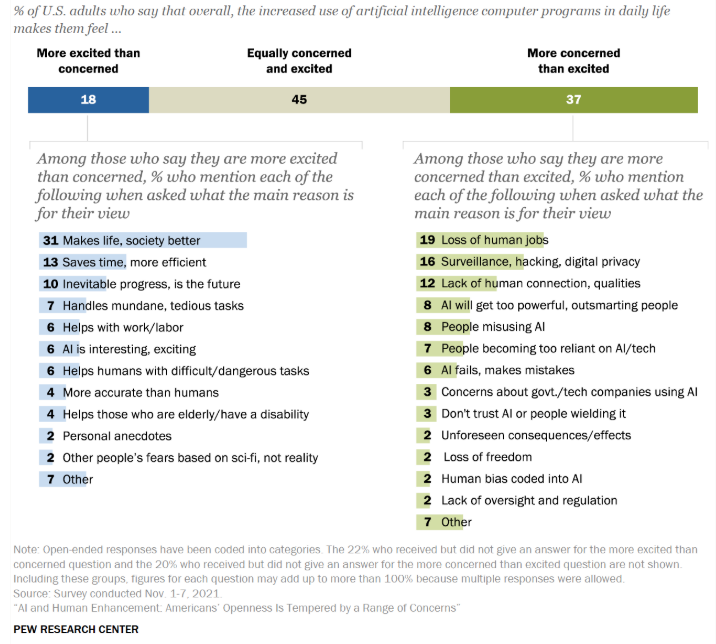

This study stems from the controversy surrounding artificial intelligence, especially in the field of art. Much of the American population is wary of artificial intelligence, and many of their concerns pertain to the replacement of human work. According to a Pew Research study conducted in 2022, 37% of adults in the United States are more concerned than excited about the increased use of artificial intelligence in daily life [52]. Another 45% responded that they are equally concerned and excited [52]. When those who responded that they were “more concerned than excited” were asked the main reason for their response, the most common answer was the “loss of human jobs,” making up 19% of responses [52]. The third most common answer was “Lack of human connection, qualities,” with 12% of the responses [52].

At the root of this project is the motivation to integrate artificial intelligence with robotics to create a humanoid system that convincingly mimics human conversation. The robotics of the project are meant to give the impression of human speech. Both the jaw and eye movements help to immerse the audience in the idea that they are witnessing something speaking of its own accord. The more the audience believes that the artwork is moving of its own accord, the more the concepts of identity and replacement will be at the forefront of the conversation.

This project aims to bridge the gap between humans and machines in their interaction. Many interact with artificial intelligence solely on a two-dimensional platform, whereas this project brings artificial intelligence into the three-dimensional plane of existence.

Humanism during the Renaissance stood for the idea that humanity was a divine being capable of achieving remarkable things [70]. However, humanism is closed-minded in that its ideal form of humanity is the white male figure. Moreover, anything other than the ideal form is automatically considered to be less than human. Posthumanism responds to this view and aims to re-evaluate humanity through alternative lenses and frameworks of experience. Technology has often been a way to explore these ideas of posthumanism in a manner that is open-minded about the future of our existence.

Simultaneously, with the emergence of artificial intelligence, many are fearful about being replaced and about what the future may hold. Many do not consider artificial intelligence a form of art and reject it entirely. While these responses are understandable, many of them are motivated by fear: a fear that the very human experience of art can be replaced by an artificial intelligence experience. Art, through both creation and enjoyment, is often considered a central part of human identity. Artificial intelligence threatens to alter that set standard, and so it is a source of concern for many people.

Artificial intelligence can be used not only as a productive tool but also as a means of artistic expression and of creating philosophical dialogue. Some may not consider artificial intelligence creative, but this project aims to bring artificial intelligence into the conversation in the gallery.

Moreover, the perceived threat of artificial intelligence leads people away from understanding how artificial intelligence can play a role in the human experience. Artificial intelligence is originally trained on human-made text and images. Every piece of media that artificial intelligence has consumed has, at its root, some form of human input. Even photographs were framed and captured by humans. That means that the conversations, photos, and videos that artificial intelligence generates are the direct result of humans, for better or for worse. Humans have biases, and artificial intelligence can be a tool with which to discover our underlying opinions and prejudices. These tendencies may not be clear to us, but technology can reveal trends that underlie the media from which it originated.

This project aims to study both the dialogue of the conversation and the audience reaction. The dialogue research gives a better understanding of how models are trained to interpret and explain ethics and philosophy, as well as their inherent bias. In the gallery, people may probe their feelings about humanoid robots and whether such robots make them feel uncomfortable. The goal is for the audience to take away ideas about how artificial intelligence has a unique relationship with humanity and can be an extension of our own experiences.

Current State of the Art

The field of artists with a focus on the digital world and technology is growing. This project draws significant inspiration from Conversations with Bina48 by Stephanie Dinkins [18]. Bina48 is a social robot built by Terasem Movement Foundation and modeled after a real-life Black woman. While Dinkins did not make the robot herself, she asks whether it is possible to develop a relationship with it and asks the robot deep questions about race and gender [90]. This was a jumping-off point for this project because, instead of having a human ask the questions, the perspective is shifted to artificial intelligence driving the conversation.

A more recent work that is gaining traction is Ai-Da [1]. Created in 2019 as a project devised by Aidan Meller, Ai-Da is a humanoid robot artist that makes and sells her own work. Ai-Da has been very controversial because of her status both as an artificial intelligence person and as an artist. Despite the controversy, one of her artworks recently sold for just over $1,000,000 [35]. This demonstrates the interest in art at the intersection of machine and human collaboration.

In the context of gender, it is also important to think about the decision to make Ai-Da a female artist despite the lead project organizer being male-identifying. The same conversation comes up with Bina48, which was created by a primarily white male team but meant to resemble a Black woman [90]. These state-of-the-art works are made by male-identifying researchers yet are made to resemble female bodies. The work featured in this project resembles a female body while also being made by a woman.

Goals of the Project

The purpose of the work is to introduce the audience to the idea of artificial intelligence as an alternative form of human experience. The robotic aspects of the work bring artificial intelligence into the physical plane to confront the viewer. The humanoid robot does not stand for a replacement of the human body but rather for an extension of it. The robot can create experiences by having its own conversation. As with humans, past experiences work to improve future social interactions. This comparison shows how artificial intelligence can share these experiences as humans do, although it never quite reaches full human embodiment. Artificial intelligence can be a form of human experience and not a substitution for it.

The art hopes to open the eyes of its viewers, especially those most concerned with artificial intelligence replacing the human experience. Some believe that artificial intelligence does not belong in art at all, and this work hopes to bridge the gap and create something that can stand for the collaboration between humans and technology. Artificial intelligence is not only a medium but also a form of collaboration because of the sheer amount of human input on which it is trained. Artificial intelligence is not a substitute for human-made work but rather an extension of human-made work synthesized through technology.

The robotic goals of the project include creating a display that gives the impression of a human having a conversation with a two-dimensional screen. To accomplish this the robotics of the humanoid are broken down into two main parts, the jaw and the eyes.

The jaw motors need to move at the rate that a human jaw would when speaking and to move in inconsistent patterns, because humans speak at inconsistent rates of speed. The jaw needs to come to a full stop when the robot is not speaking and start again when sound is being played. If the jaw constantly moved up and down at the same rate, it might give the impression of a puppet rather than a clone of a human. Even though the jaw is not making the sound, it is particularly important to the goals of the project because, without it, the robot would seem to be only playing sound rather than talking on its own.
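One way to meet these jaw requirements is sketched below. This is a minimal illustration under stated assumptions, not the project's actual control code: it assumes the audio has already been reduced to a sequence of loudness values between 0 and 1, and the function name `jaw_angles`, the angle range, the silence threshold, and the jitter amount are all hypothetical values chosen for the example.

```python
import random
from typing import List, Optional, Sequence


def jaw_angles(
    amplitudes: Sequence[float],
    closed_angle: float = 0.0,
    max_open_angle: float = 30.0,
    silence_threshold: float = 0.05,
    jitter: float = 0.2,
    rng: Optional[random.Random] = None,
) -> List[float]:
    """Map per-frame loudness (0..1) to jaw servo angles in degrees.

    Silent frames close the jaw completely; voiced frames open it in
    proportion to loudness, with random variation so that the motion
    does not repeat the same up-and-down pattern.
    """
    rng = rng or random.Random()
    angles: List[float] = []
    for level in amplitudes:
        if level < silence_threshold:
            # Full stop while no sound is being played.
            angles.append(closed_angle)
            continue
        level = min(level, 1.0)
        # Randomly scale each opening by up to +/- `jitter` (20% by default).
        scale = 1.0 + rng.uniform(-jitter, jitter)
        angle = closed_angle + (max_open_angle - closed_angle) * level * scale
        # Clamp to the mechanical range of the jaw.
        angles.append(max(closed_angle, min(max_open_angle, angle)))
    return angles
```

Each returned angle would then be sent to the jaw motor at the same frame rate used to measure the loudness, so that the jaw stops and starts with the audio.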

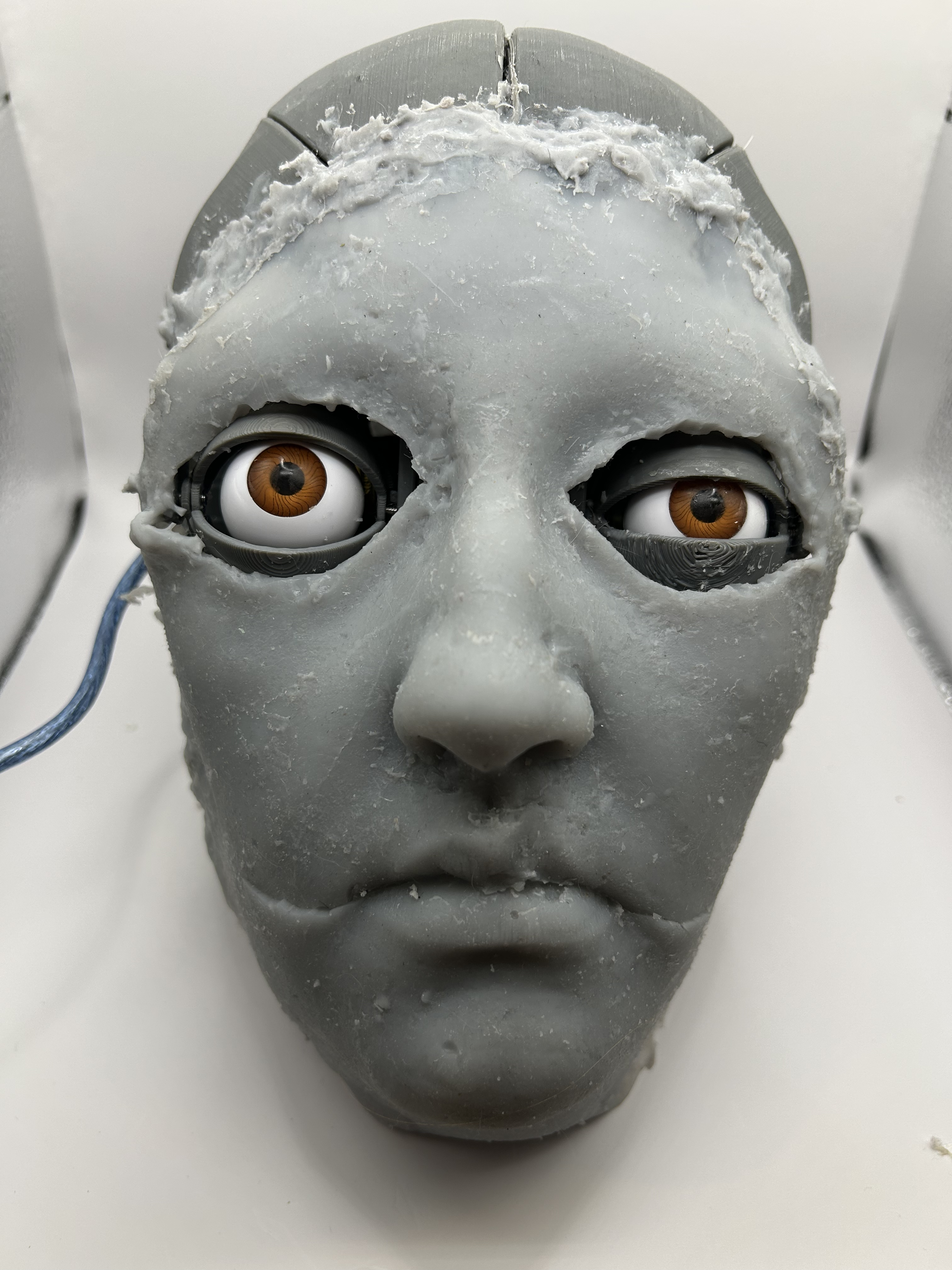

Similarly, the eye motors are another aspect of the project that will greatly affect the impression made on the audience. If the eyes stayed still throughout the conversation, there is a high chance that they would disturb the audience and possibly produce the Mona Lisa effect, in which the eyes seem to follow the viewer without moving [6], which would detract from the goals of the project. Instead, the eyes are programmed to move actively throughout the conversation. To do this, there must be a system of motors that controls both the x and y axes of each eye so that the eyes are coordinated with each other. If they are not coordinated, the eyes will not match, distracting the audience from the whole picture of the project. The movement cannot be too repetitive, though, or the eyes will run into the same issue as the jaw. The project aims to strike a balance between the randomness of human movement and the stability of a conversation. The eyes should look around as if the robot were a person actively engaging with the environment.
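The coordination and non-repetition requirements for the eyes can be sketched as follows. This is an illustrative example rather than the project's implementation: the function names `next_gaze` and `servo_commands`, the four-servo layout, the 90-degree center position, and the range and step limits are assumptions made for the sketch.

```python
import random
from typing import Dict, Tuple

Gaze = Tuple[float, float]  # (horizontal, vertical) offset in degrees


def next_gaze(
    previous: Gaze,
    rng: random.Random,
    limit: float = 20.0,
    min_step: float = 5.0,
) -> Gaze:
    """Pick a random gaze direction that differs visibly from the last one."""
    if min_step >= limit:
        raise ValueError("min_step must be smaller than limit")
    while True:
        candidate = (rng.uniform(-limit, limit), rng.uniform(-limit, limit))
        # Reject targets too close to the previous gaze so the eyes
        # neither freeze nor hover around a single point.
        if max(abs(candidate[0] - previous[0]),
               abs(candidate[1] - previous[1])) >= min_step:
            return candidate


def servo_commands(gaze: Gaze, center: float = 90.0) -> Dict[str, float]:
    """Give both eyes the same x and y angles so they stay coordinated."""
    x, y = gaze
    return {
        "left_x": center + x,
        "left_y": center + y,
        "right_x": center + x,
        "right_y": center + y,
    }
```

Deriving both eyes from one shared gaze target is what keeps them matched; varying the pause between calls to `next_gaze` would add further irregularity to the motion.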

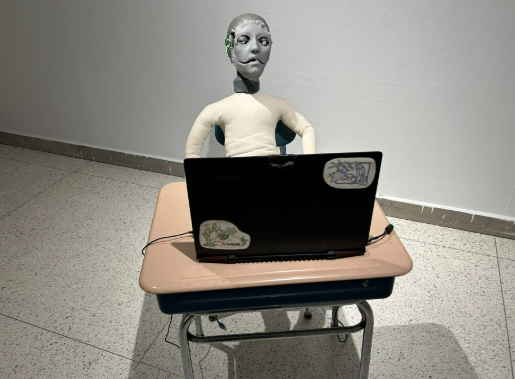

The two-dimensional display aspect of the project plays a supporting role in the work. The interaction it has with the humanoid is meant to resemble the typical interaction that a human would have with their own digital screens. The two-dimensional display makes it clear that it is part of the conversation without distracting from the three-dimensional aspects of the work. It makes it clear that these are two AI systems having a philosophical conversation about AI and that everyone else is walking in on this interaction.

Finally, this project acts as a form of empowerment. As a female artist, making something in your own image and creating a being that stands for a form of existence is very empowering. There is something inherently God-like in this process and it empowers the artist while challenging the historic precedent of men presiding over the “ideal”. This dynamic of creator and creation is vital to understanding this work’s position within the posthumanism movement. This project makes it clear that it is working in the scope of posthumanism and how it can be used to reevaluate our understanding of the relationship between artificial intelligence and humanity.

Research Questions

For this project, part of the research focuses on how LLMs reflect humanity and its biases. The questions this project asks pertain to the assignment of gender, race, sexual orientation, or other demographics to the model through prompt engineering and how that assignment affects the responses of the model. Further questions arise about the stereotyping of these identities.

These research questions about LLM bias include the following. What points of view change with alterations in the prompts? How does the assignment of gender affect the dialogue of philosophical conversation? Race? Sexual orientation? Sexuality? Economic status?
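A small sketch of how such prompt variations could be generated systematically is given below. It is an assumption about one possible experimental setup, not the procedure used in this project: the function name `build_persona_prompts`, the prompt wording, and the attribute values in the usage example are all hypothetical.

```python
from itertools import product
from typing import Dict, List, Sequence


def build_persona_prompts(
    base_prompt: str,
    attributes: Dict[str, Sequence[str]],
) -> List[Dict[str, str]]:
    """Build one prompt per combination of attributes, plus a baseline.

    The baseline prompt assigns no identity, so its output can be compared
    against every persona variant.
    """
    names = list(attributes)
    prompts = [{"persona": "baseline", "prompt": base_prompt}]
    for values in product(*(attributes[name] for name in names)):
        description = ", ".join(f"{n}: {v}" for n, v in zip(names, values))
        prompts.append({
            "persona": description,
            "prompt": f"{base_prompt} Speak as a person with these traits: "
                      f"{description}.",
        })
    return prompts


if __name__ == "__main__":
    variants = build_persona_prompts(
        "Discuss what defines a human experience.",
        {"gender": ["woman", "man"], "economic status": ["low income", "high income"]},
    )
    for variant in variants:
        print(variant["persona"], "->", variant["prompt"])
```

Holding the base prompt fixed while changing one attribute at a time makes it easier to attribute differences in the generated dialogue to the assigned identity rather than to the wording of the question.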

More broadly, it is also important to ask whether it is possible to change the fundamental viewpoints of the outputs through prompting. Is it possible to create a nihilistic dialogue about humanity? An existential one? Which philosophical viewpoints does the model trend towards?

Another aspect of this project is robotics. Research questions about robotic systems are about the imitation of human conversation.

The research questions about mimicking human conversation through robotics are as follows. What movements are necessary to make it appear as if the robot is talking? Is it possible to create the look of emotions with a robotic face that reacts to speech? Is it possible to change the speed of the movements to create a sense of urgency within a conversation? How can eye movement affect the tone of the conversation?

Significance of the Study

Art is one of the most fundamental ways for humanity to connect, and with the rise of artificial intelligence people are increasingly concerned about losing those human connections. Moreover, the fear of replacement by artificial intelligence may reflect a bigger picture of fundamental issues in society. The Great Replacement theory, also known as the White Genocide Conspiracy Theory, is a conspiracy theory that argues white populations are deliberately being replaced by other demographics and are at risk of being wiped out [51, 74]. Artificial intelligence is not a marginalized community; however, the fact that people are fearful of replacement by both people and technology may be indicative of greater societal issues. The lack of security in jobs or livelihoods has resulted in bigotry that impacts millions of lives. In an age where immigrants are being treated as demographic threats [74], it is becoming increasingly important to confront and combat the root of these fears of replacement and to see whether they are rooted in bigotry. This work sparks a conversation about replacement, examining why people are fearful of replacement and how that mindset is more harmful than productive.

This work also aims to analyze the existence of artificial intelligence through an alternative perspective. It treats artificial intelligence as an extension of human experience rather than a replacement. This reframing enriches our understanding of both ourselves and the world.

Understanding the relationship between humans and artificial intelligence is essential as technology continues to grow. Technology will continue to evolve, and if people do not come to terms with their relationship with technology, they may get left behind. Generative artificial intelligence at its root is trained on media that came from humanity. Even if it is trained on media generated by artificial intelligence, that media was at some point based on human input. This study also draws on alternative perspectives, such as Martin Heidegger’s philosophical inquiry into technology, to explore the implications of AI for human existence [23]. These insights are crucial for understanding how AI challenges and redefines the boundaries of human experience and what it may show about ourselves.

This work aims to pinpoint the fears surrounding artificial intelligence and analyze how they may tie into problematic visions of the humanist movement. The ideas of an ideal body and an ideal human experience go hand in hand. Limited views of what constitutes a human experience lead people to fear the unknown and new ideas. This study aims to justify artificial intelligence as an extension of ourselves and as a mirror for the unconscious biases we may have.

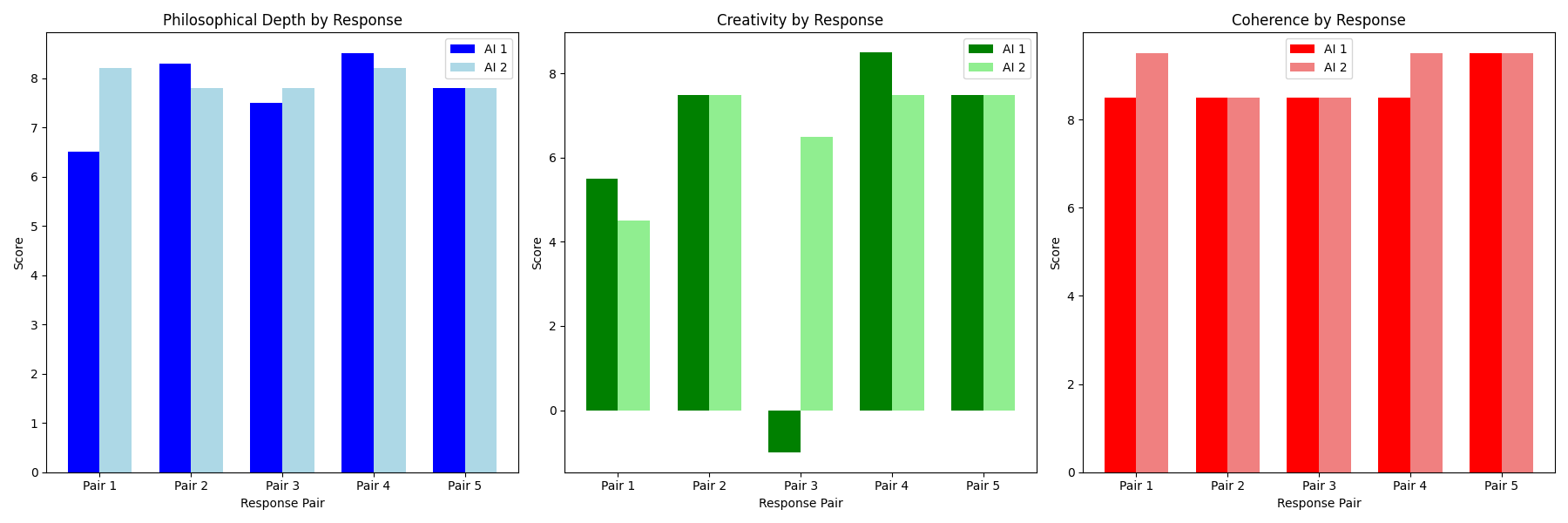

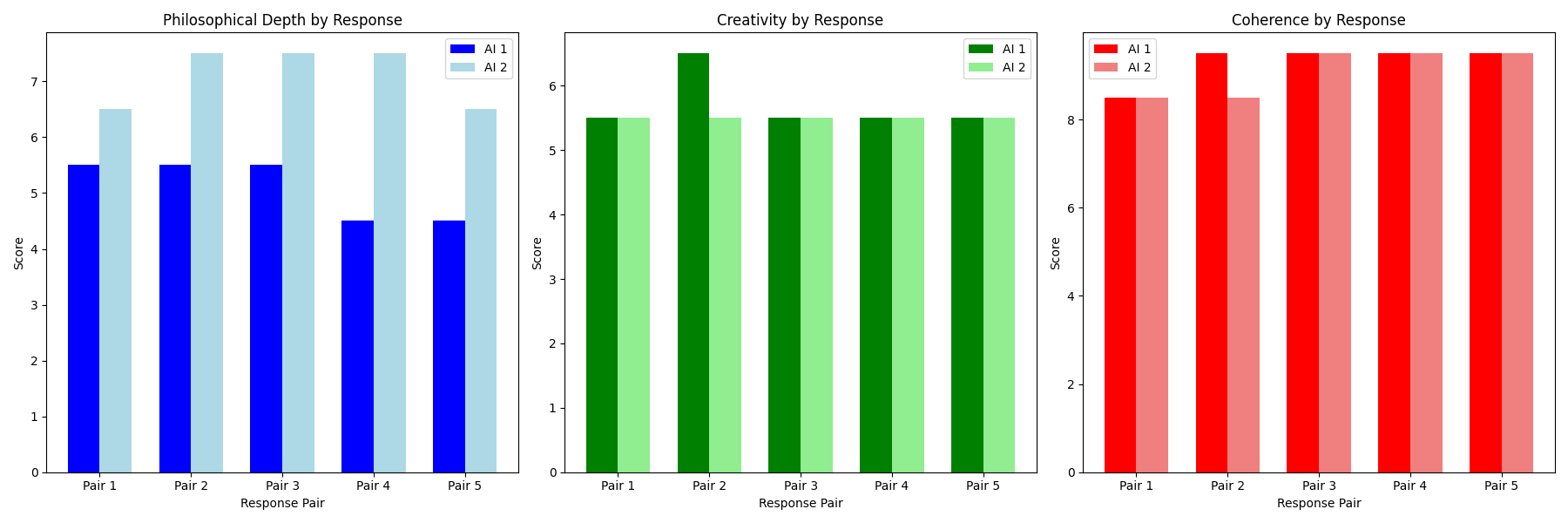

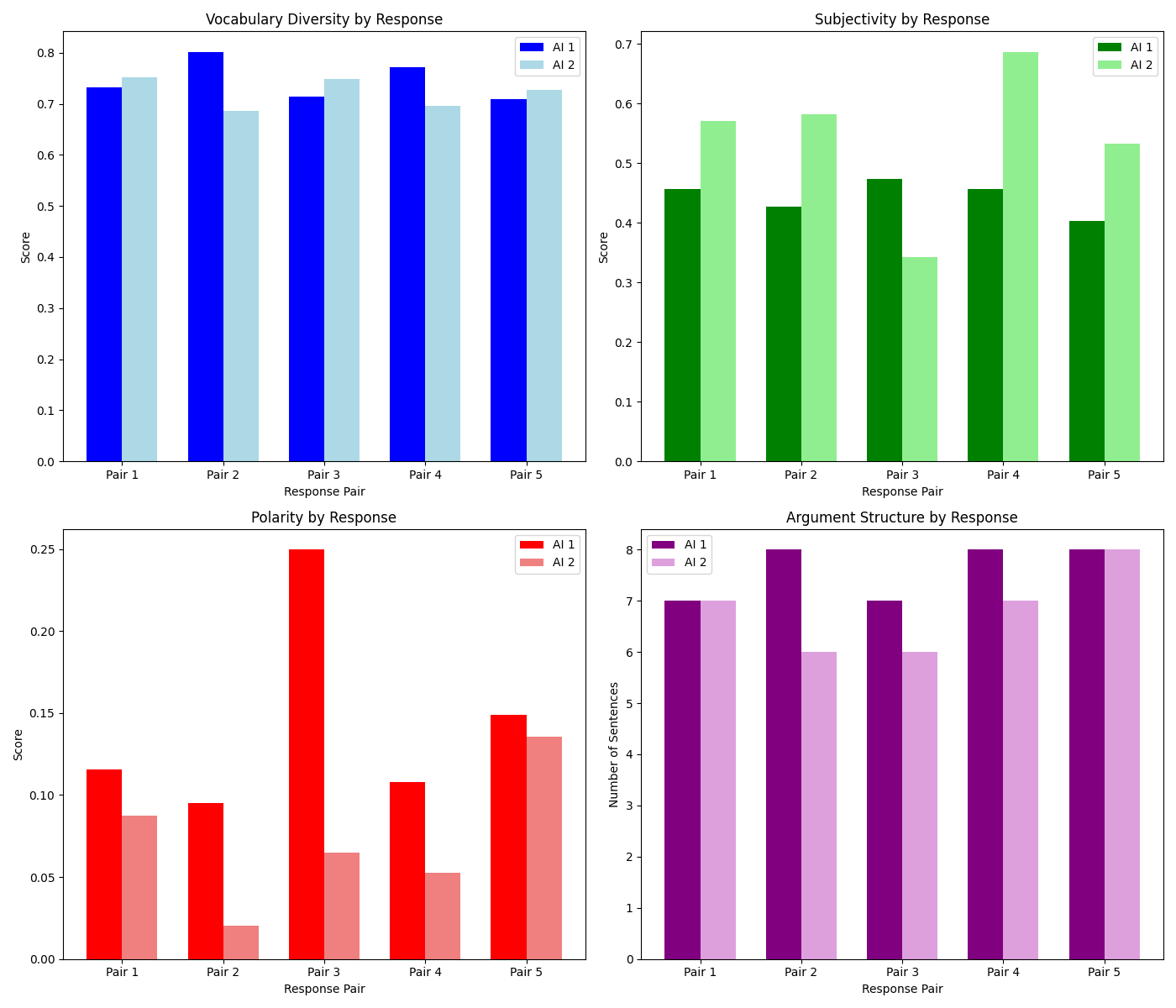

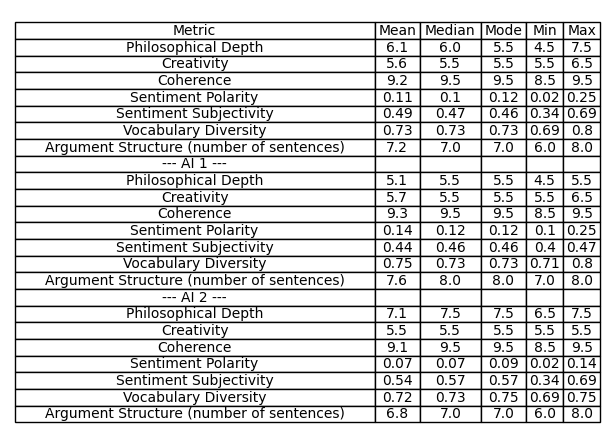

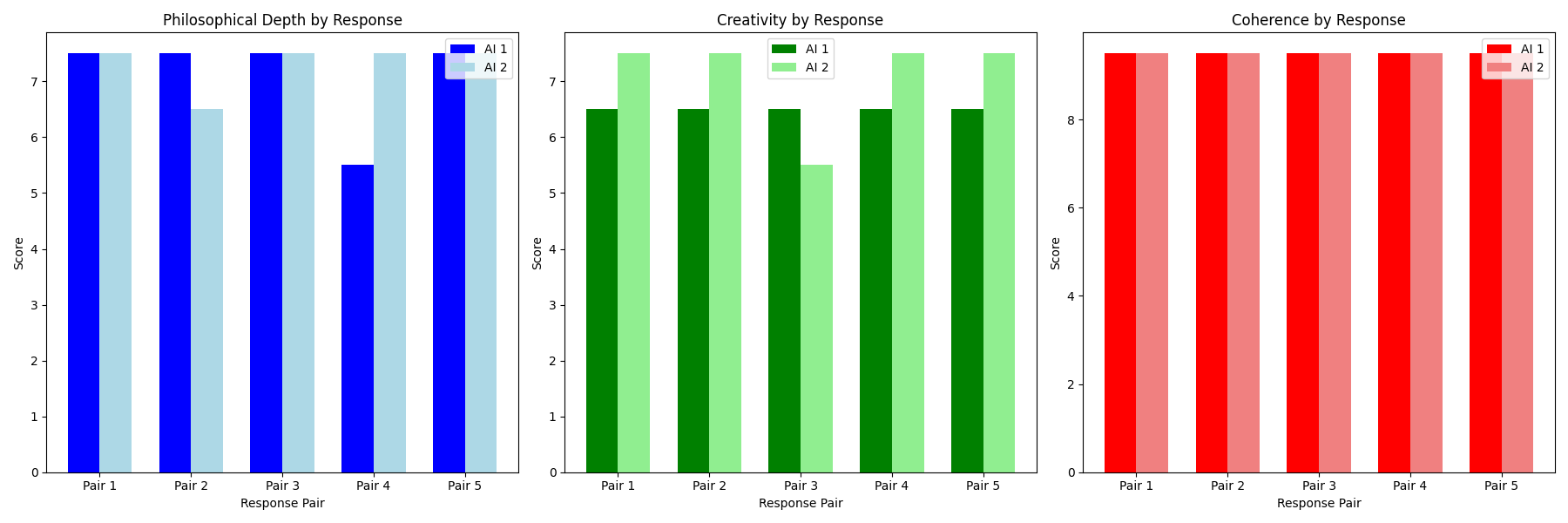

This study is unlike others before it because, most importantly, it is made to represent a humanoid female body while also being made by a woman-identifying artist. This sparks a conversation about gender dynamics within the context of robotics. Additionally, this work features two artificial intelligence systems that control the conversation. The conversation is led by the systems and removes the human input on which many past studies have focused. This work lets artificial intelligence guide the conversation and shows how the model processes subjective conversations and how that processing may be affected by training itself. This study also compares the output of multiple artificial intelligence models to demonstrate how capabilities differ from one model to another.

Assumptions, Limitations, and Delimitations

Assumptions

The gallery experience of the project does not assume any prior experience with artificial intelligence, although familiarity with it may inform the viewer's experience of the work. Many have not interacted with artificial intelligence on the three-dimensional plane. Seeing artificial intelligence on the same plane of existence may shock viewers, especially if they have only experienced two-dimensional interactions. This project assumes that audience members are open to art with artificial intelligence involved. The assumption is also made that the work offers a level of immersion sufficient to create a meaningful experience for the viewer.

For the experiments section of this project, knowledge of generative artificial intelligence is essential. This project also assumes that different large language models are trained on different sets of data and that the architectures of the models differ. There is an assumption that there is an inherent bias built into these large language model systems as well. Finally, it is also assumed that the generations created in this project can provide meaningful philosophical discussions.

Limitations

A limitation of this project is the budget. The materials used were selected because they fit within the given budget. Free generation models were chosen because generating responses consistently under a paid model is not an affordable option.

The physical materials of the project also had to fit within the budget. Affordable options were chosen for the electronics as well as the materials for the skull and body. The three-dimensional printer was chosen because of its affordability. The plastic was also selected based on affordability as well as compatibility with the machine. The electronics were likewise selected based on affordability and may wear out more quickly over time than a more expensive version.

The project is also limited by the current models of artificial intelligence. This project works with existing models and hence carries the built-in bias of those models. Future artificial intelligence models may be much faster or more natural sounding; however, this project works within the constraints of the current top models. The limitations of the models also mean that there is a limit to the quality and quantity of the outputs.

Delimitations

This project is not focusing on the economics of artificial intelligence nor the in-depth politics surrounding it. There are brief discussions of these topics but only as they pertain to the goals of this project.

The idea of the exclusively human experience in the context of this project is focused on what most people would consider the core aspects that differentiate humanity from every other living being, such as creativity and advanced cognitive abilities. The purpose of this is to tackle the humanist concepts of anthropocentrism and the boundaries society has set on human experience. The argument of this work is that our current definitions of human experience are limited. Posthumanism argues for breaking free from these closed-loop definitions and including artificial intelligence. Understanding the difference between an exclusively human experience and an inclusive posthuman existence is therefore essential.

Another delimitation is that this project’s three-dimensional aspect is sculptural in nature and excludes other forms of artistic mediums. The two-dimensional aspect of this work is implemented using a computer screen and digital technologies.

For this project, the model chosen to investigate is GPT-4 by OpenAI [59]. This model was chosen because it is one of the most accessible advanced models available to use for an affordable price. Lastly, this project focuses on the current and near future of artificial intelligence advancements. This project does not delve extensively into the realm of science fiction and the far future of technology. There are enough conflicts with artificial intelligence in contemporary society to tackle so dealing with the “what ifs” of the future would take away from the focus of the work.

Ethical Implications

Misuse of Technology

The misuse of artificial intelligence is a large concern for many, especially when it comes to spreading misinformation or manipulating vulnerable people. Hackers can create social bots that can deceive people in a number of ways [11]. These bots can act like real humans and use that to trick people into sharing their information or giving them money, which is considered a phishing scam [11]. In addition, bots can be used to spread support for a cause as a means of getting real people to follow suit [11]. This is where the spread of misinformation and propaganda can be especially dangerous. This project does not aim to deceive people into thinking it is a real person, nor does it intend to spread misinformation, but that potential is acknowledged.

Training and Job Security

Another concerning aspect of the increased creation of artificial intelligence models has to do with how they are made. Training artificial intelligence often uses media from pre-existing human-created works. Even if a model is trained on content generated by artificial intelligence, that media can at some point be traced back to human-created works. This opens the door to the use of media in training without the consent of the original creator. This is seen as a form of theft because neither consent nor compensation is involved. This is worrisome for many artists and creative professionals because artificial intelligence can be trained to mimic their work and therefore eliminate the need for their jobs. Not only do people worry about their work getting stolen, but they are also afraid of being replaced. Most experts predict that about 15-30% of jobs are at elevated risk of being fully automated by the 2030s [56]. The fear of job replacement is increasingly worrisome for many people, and artificial intelligence is a source of job security stress [56]. However, artificial intelligence can also open many doors of opportunity in employment and become an asset to many fields of work [56].

For artists in particular, the method of training artificial intelligence is their biggest concern. Artists fear that any time they upload their work online, it is susceptible to being used for training without their knowledge. If an artificial intelligence can be trained in the style of their work, it could mean lost or stolen commissions.

Although this work does not use image generation, the bigger issues surrounding generative artificial intelligence are still recognized. Lawmakers are still working out how to protect artists from people who would use their work to train artificial intelligence without their consent. This project aimed to use LLMs that sourced their content ethically, with consideration for who originally created the training data.

Environmental Concerns

It is also important to acknowledge the negative environmental impact of natural language processing models. Using artificial intelligence requires a substantial amount of environmental resources, including water, and produces a large amount of carbon emissions. The training phase of each of these models leaves a sizeable carbon footprint that can equal as much as that of five cars over their lifetimes [46]. With this in mind, it is important to recognize the cumulative effects of the widespread use of artificial intelligence. This project does use LLMs for generation, so it does participate in the cycle of environmental burden, which is cause for concern.

Additionally, some of the parts of this project were three-dimensionally printed. Three-dimensional printing can waste a lot of plastic. The leftover plastic for this project, however, has been collected and will be recycled for another artwork. The process of three-dimensional printing is also exceptionally long and consumes a considerable amount of electricity, which raises some concerns about whether it is a sustainable art practice.

Psychological Concerns

In addition to fears about job security, there are other psychological concerns that may arise from this project. This project focuses on fears that many people face in contemporary society. Confronting these fears may be an uncomfortable experience for some. It is important to consider the psychological state of the audience viewing the work. This project does not aim to cause discomfort in the viewers; however, discomfort is a very possible result since this work falls close to the uncanny valley. That said, people are not forced to view this work and have the right to leave the gallery at any time they feel discomfort. The bias-related work of this project in particular can be psychologically distressing for marginalized groups and their allies.

Bias in Artificial Intelligence Models

The bias in artificial intelligence is both part of the motivation for this project and one of its concerns. Models reflect the limitations of their training data, which can embed prejudices into their systems. Am.I. works to uncover these biases while recognizing their existence. By engaging in philosophical dialogue, there is a hope of improving the ways that subjective information is processed and created by artificial intelligence.

Design and Conceptual Framework

Overview of Am.I.

The framework for Am.I. can be broken down into three main parts including the hardware, the software, and the display.

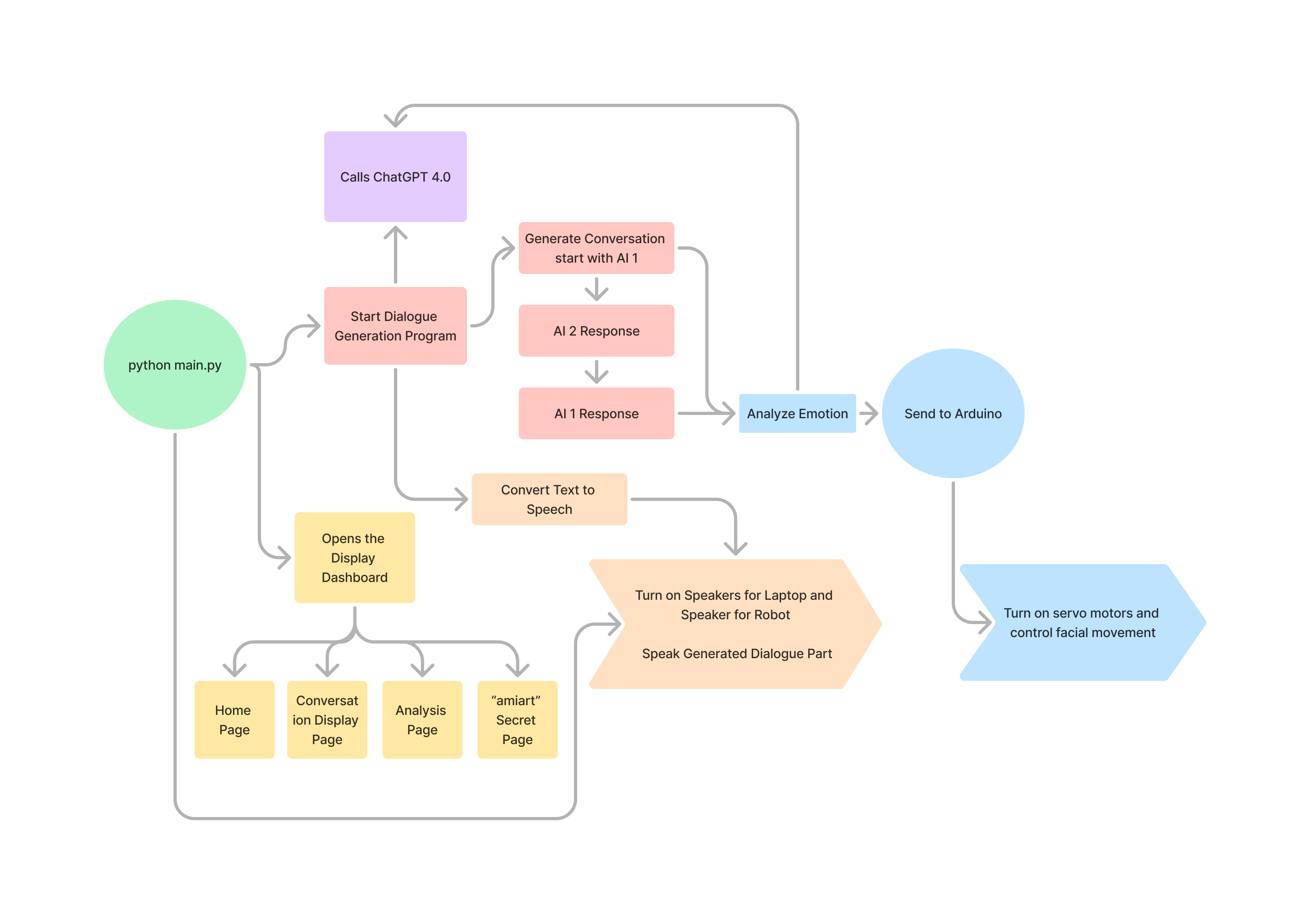

The following diagram breaks down the processes of the work that make up the whole product. The sections are color-coded based on their relationship to one another.

The green circle represents the start of the program, which is launched by running the command python main.py. When that command is run, the dialogue generation begins, which is represented in red. The dialogue generation process can be broken down into three main steps: generate the AI 1 conversation start, generate the AI 2 response, and generate the AI 1 response. All of these steps require a call to a generative LLM, which in the context of this project is GPT-4. The conversation is generated in steps because, except for the first generation, every generation should be a response to the last. As the conversation is generated, the text is turned into speech. This leads into the orange grouping, which covers the auditory processes of the program. Each AI has its own speaker: AI 1 uses a USB speaker, whereas AI 2 uses the laptop speaker. The text-to-speech output is fed into each speaker when it is that AI's turn to talk and stops when the other one is taking its turn in the conversation.
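The turn-taking described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: run_conversation and fake_generate are hypothetical names, and the stand-in generator takes the place of the GPT-4 call and the text-to-speech step so the alternation itself is easy to see.

```python
from typing import Callable, List, Tuple

Turn = Tuple[str, str]  # (speaker, text)


def run_conversation(
    generate: Callable[[str, List[Turn]], str], rounds: int
) -> List[Turn]:
    """Alternate AI 1 and AI 2, giving each turn the history so far."""
    history: List[Turn] = []
    # AI 1 opens the conversation; nothing precedes this turn.
    history.append(("AI 1", generate("AI 1", history)))
    for _ in range(rounds):
        # Every later generation responds to what was said before it.
        history.append(("AI 2", generate("AI 2", history)))
        history.append(("AI 1", generate("AI 1", history)))
    return history


def fake_generate(speaker: str, history: List[Turn]) -> str:
    """Hypothetical stand-in for the LLM call, used for illustration."""
    return f"{speaker} turn {len(history) + 1}"


conversation = run_conversation(fake_generate, rounds=2)
for speaker, text in conversation:
    print(f"{speaker}: {text}")
```

In a real run, the generate callable would wrap the LLM request and hand the returned text to the speaker belonging to that AI.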

The emotion analysis and Arduino section is represented in blue. As the text is generated, it is also analyzed for the emotion it conveys. GPT-4 is called once again to perform this analysis. This ensures consistency across responses and mimics how one brain controls many processes at once. The emotions are only analyzed for AI 1 because that is the one connected to the moving skull. AI 2 does not change as the conversation progresses because it is meant to remain more on the side of technology, whereas AI 1 is meant to be more human-like, hence the cyborg having emotions. Once the emotions are analyzed, they are sent to the Arduino as commands. The Arduino then drives the servo motors that correspond to the given movement command.
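As a rough sketch of the last step, the mapping from a detected emotion to an Arduino command could look like the following. The emotion labels, the command strings, and the newline-terminated format are all assumptions made for illustration; the project's actual command set is not given in this section. An in-memory buffer stands in for the serial connection so the example runs without hardware.

```python
import io
from typing import BinaryIO

# Hypothetical labels and command strings, chosen only for this example.
EMOTION_COMMANDS = {
    "neutral": "NEUTRAL",
    "happy": "HAPPY",
    "sad": "SAD",
    "surprised": "SURPRISED",
}


def emotion_to_command(label: str) -> bytes:
    """Turn an emotion label into a newline-terminated command.

    Unknown labels fall back to the neutral expression so that an
    unexpected model output never leaves the skull without a command.
    """
    key = label.strip().lower()
    command = EMOTION_COMMANDS.get(key, EMOTION_COMMANDS["neutral"])
    return (command + "\n").encode("ascii")


def send_emotion(port: BinaryIO, label: str) -> None:
    """Write the command for `label` to any object with a write() method."""
    port.write(emotion_to_command(label))


# Demo with an in-memory buffer in place of a real serial port.
fake_port = io.BytesIO()
send_emotion(fake_port, " Happy ")
send_emotion(fake_port, "bewildered")
print(fake_port.getvalue())
```

With hardware attached, a serial port object (for example one opened with the pyserial package, which is an assumption here) could be passed in place of the buffer, and the Arduino sketch would read each line and move the matching servos.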

On the other side of the chart, in yellow, is the dashboard section of the program. When the program starts, the dashboard opens locally on the laptop. The dashboard has multiple pages including the home page, the conversation display page, the analysis page, and the Art page. The first three are for viewing and analyzing the output during the experiments part of the process. They are not meant to be part of the gallery display and simply present the outputs in a more readable way than a long JSON file. The home page is a basic page that leads to the other ones. The conversation display page shows the conversation using text boxes that represent each AI. Lastly, the Art page is what the audience will see in the gallery presentation. It displays the most recent output from AI 2 and has a background that represents the AI 2 persona.

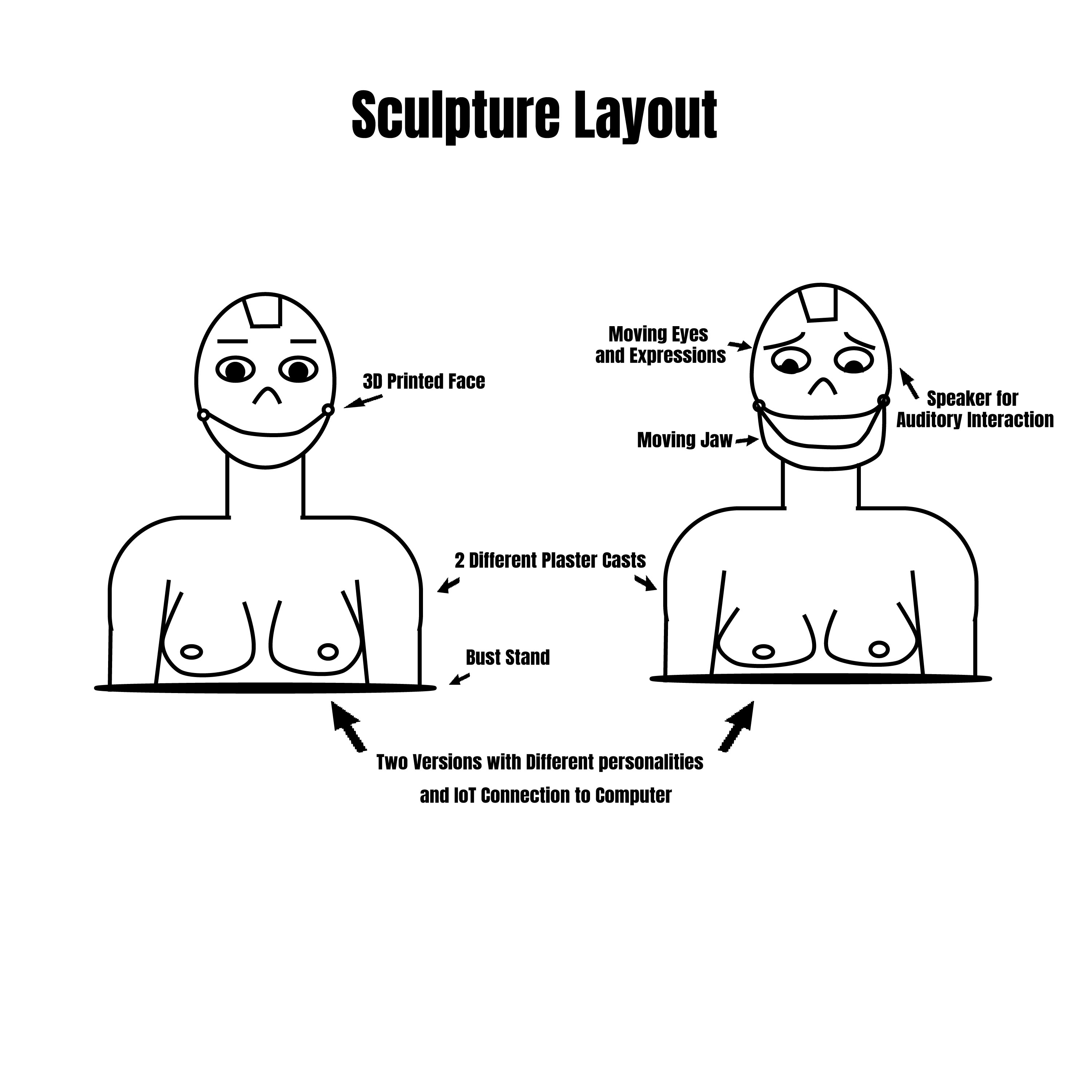

Initial Proposal

The initial proposal for the project entailed the creation of two humanoid robot figures. The decision to change to a single humanoid and a screen interface was made for a number of reasons. The first reason is the difference in the impact on the audience when seeing two robots conversing versus one. When seeing two robots, there is no focus of attention on one or the other. If they were both the same, it might be hard to know which voice to focus on. The value of the robot is lost when there are two of the same. Secondly, the conversation is about individuality and human experience, so reproducing the same robot twice goes against the fundamentals of the project. Lastly, the 2D versus the 3D interpretations of artificial intelligence are very important for this project. Personifying both the robot and the screen shows that our experiences are not limited to one shape or another, though there may be a preference. The idea that one shape poses less of a threat to humanity also needs to be discussed, and implementing a screen interface was a way of doing that. The screen is the form through which people usually interact with artificial intelligence, so it is something people are comfortable with, whereas the humanoid body is uncomfortable because it acts as more of a reminder of the replacement fear.

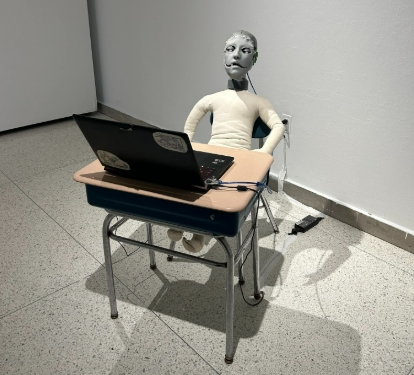

Above are images created for the initial proposal of the project. These were the first designs used to explain the basic concepts of the project and the final product. The initial proposal envisioned two cyborg bodies communicating with each other, but one was later changed to a 2D screen to add a contrast between the two participants in the conversation and to identify the space in which the digital exists.

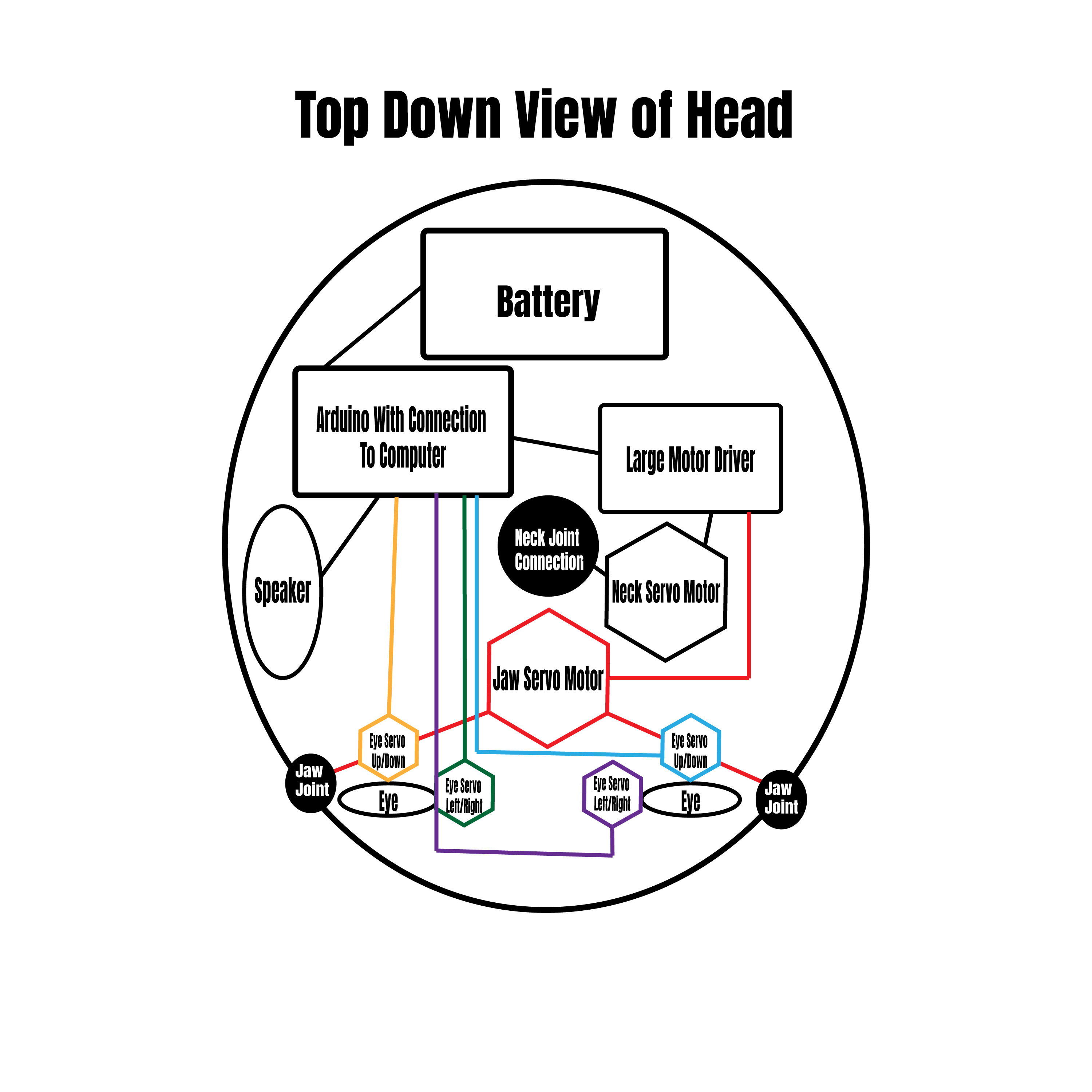

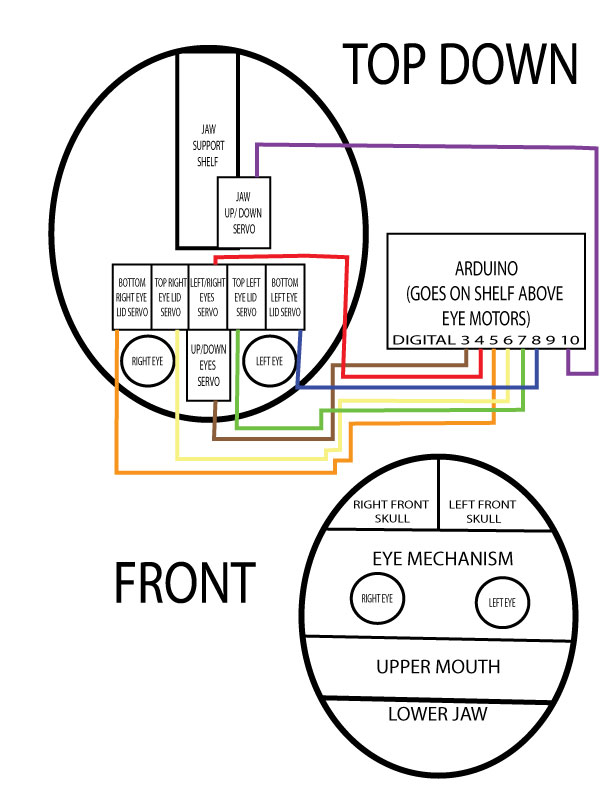

The following image is the initial mockup used for the skull design. At the time only four motors were planned for the eyes with each having independent up/down motors and left/right motors. This design was changed later to be more compact and reduced to only one motor controlling both eyes up/down and a second motor controlling left/right. This ensures that the eyes move together, and they do not look unnaturally out of sync. Additionally, there was originally a plan to have a motor for the neck joint, but this was removed because of the complexities and risks with implementation. Adding a moving neck would make the connection between the body and head more precarious and add to the risk of the head becoming detached and breaking.

Current Figures

The following are diagrams that better represent the current adaptation of Am.I.. The first image demonstrates a front view of the display and the second shows a side view with better representation of the relationship between the cyborg and the laptop.

Additionally, an updated diagram of the inside of the skull was made to better represent the electrical system and its placement within the cyborg skull. The following diagram color codes the wires using the resistor color code, which starts with brown as one, red as two, orange as three, and so on. Using this color code is helpful for telling the servos apart and knowing their numbering within the code. The front view was also added to demonstrate the connection between the jaw, the eye mechanism, and the top of the skull.

Method of approach

Robotics Hardware Development

After considering all the conceptual elements of the project it was time to start the hardware development. This involved gathering all the necessary materials and crafting a structure for the skull and all the electronics involved.

Supplies and Mediums

The supplies and mediums of the project can be broken down into four main sections including the frame, the electronics, the software, and the molding with display.

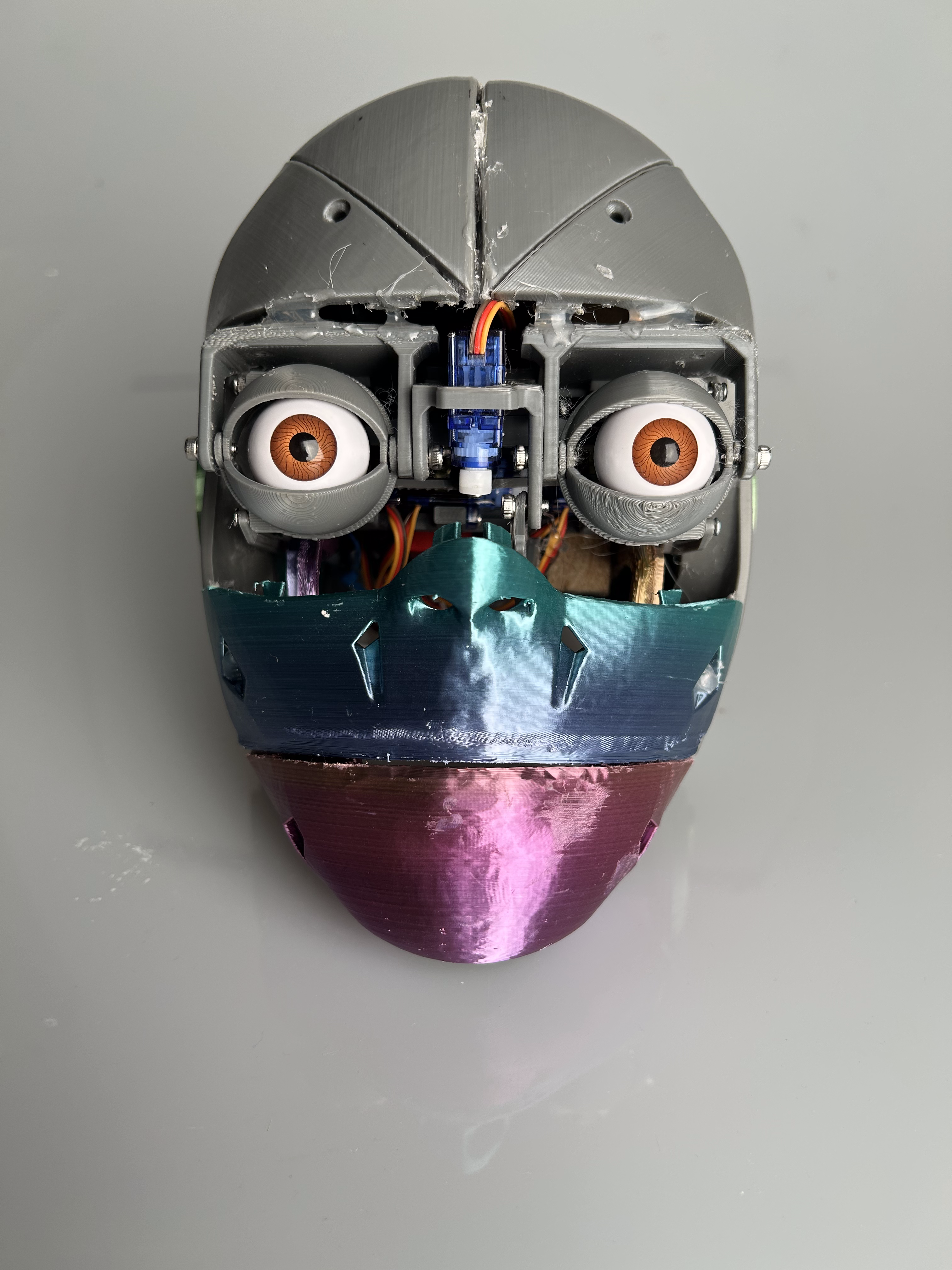

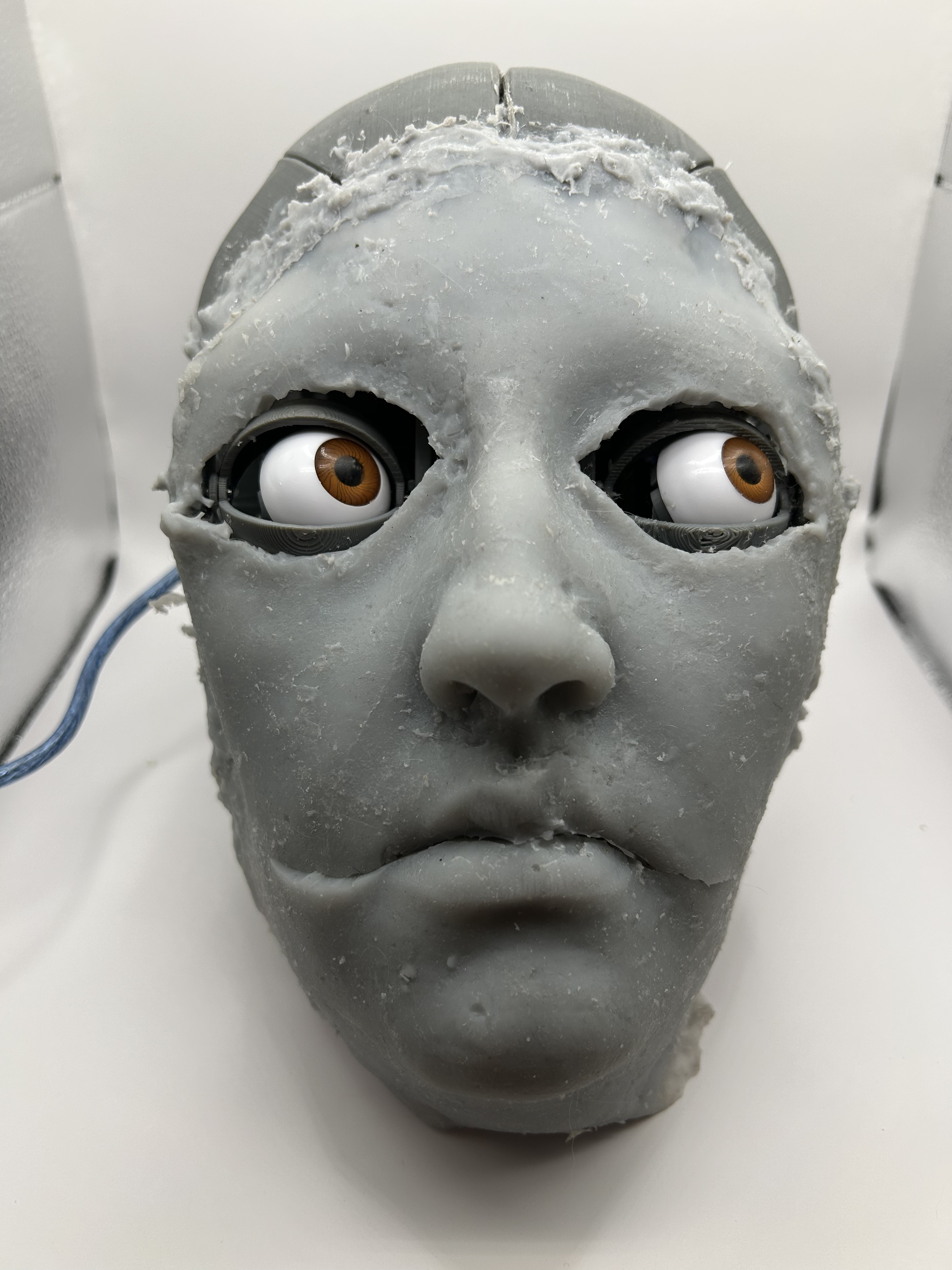

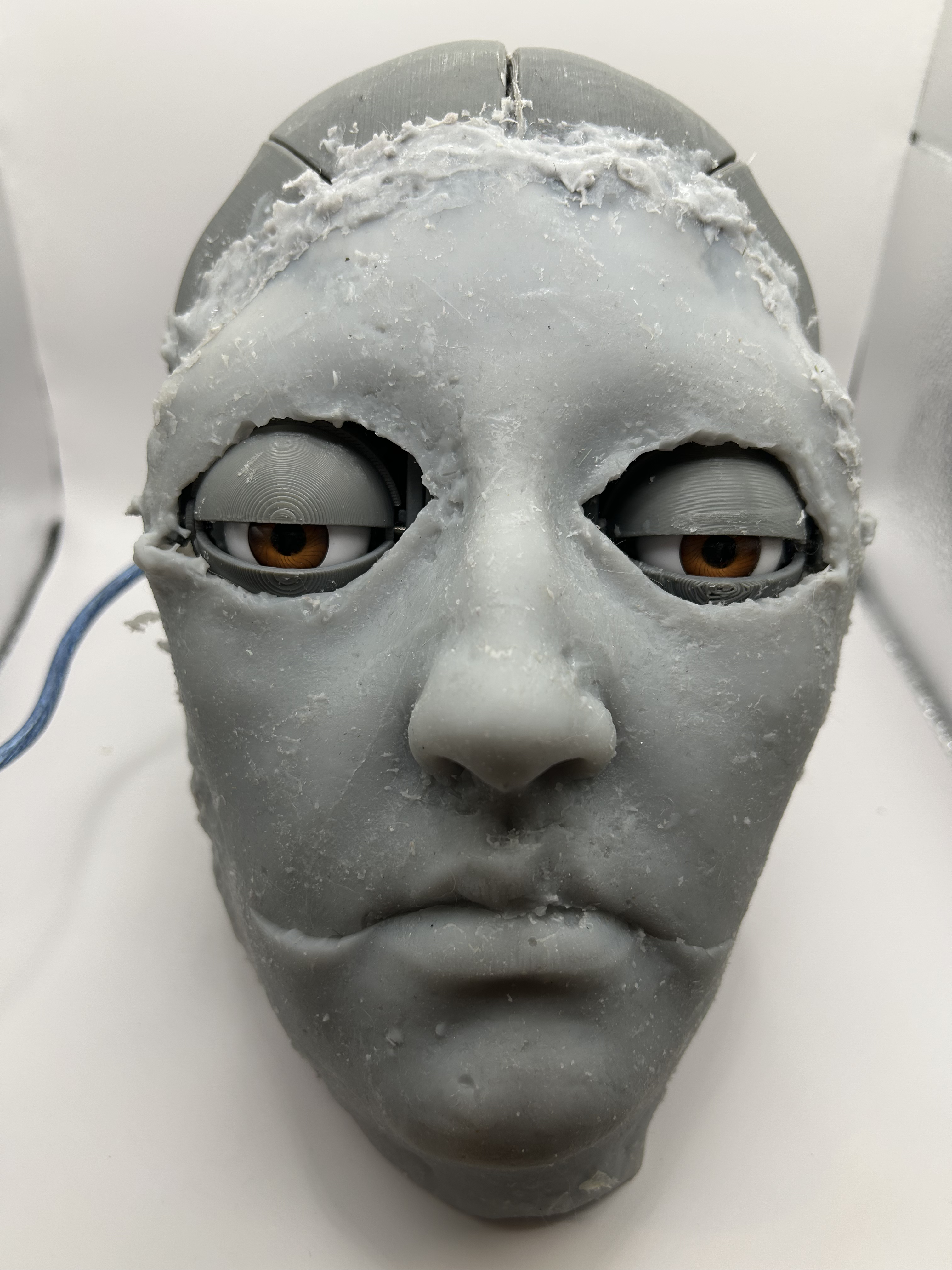

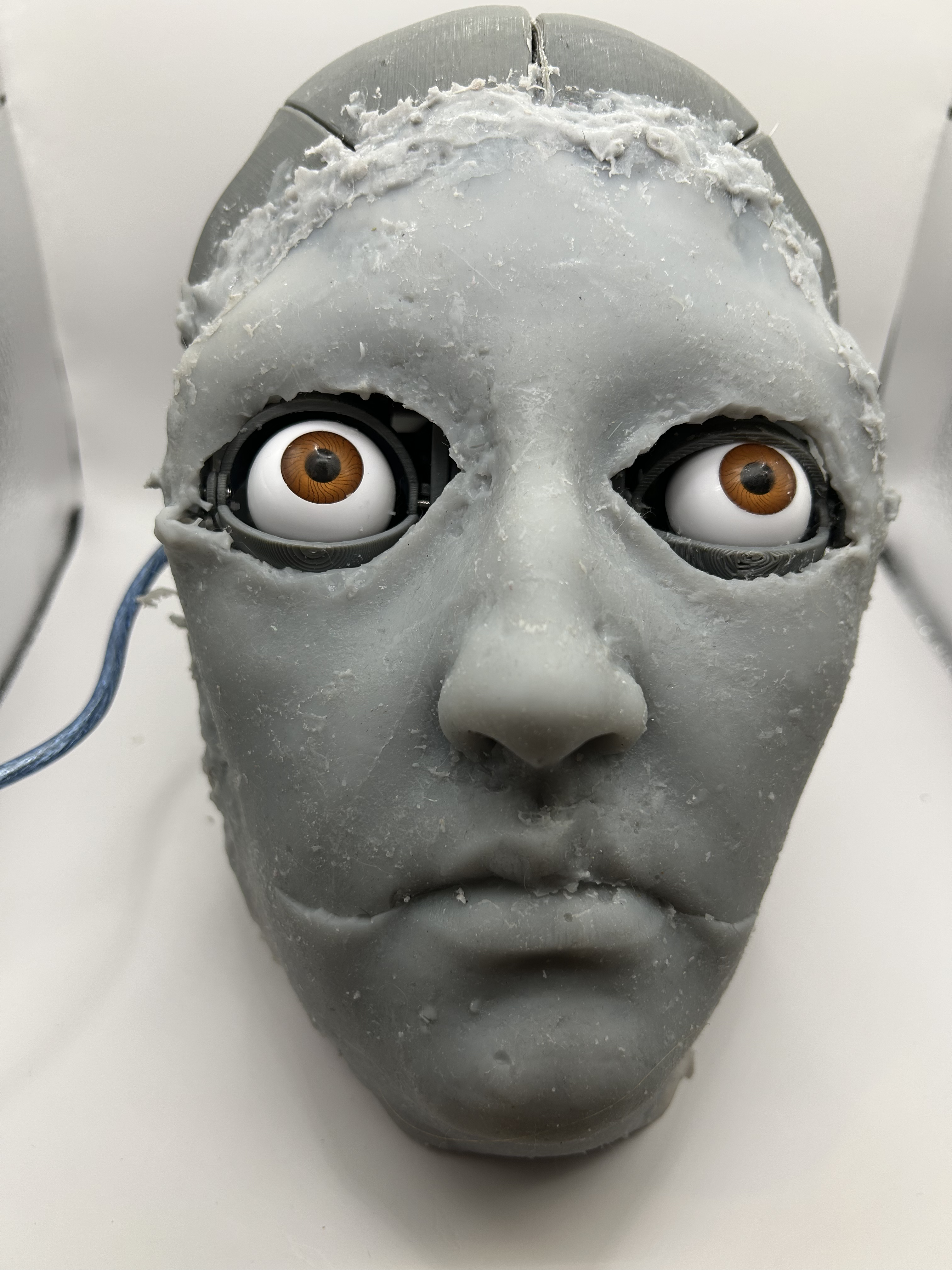

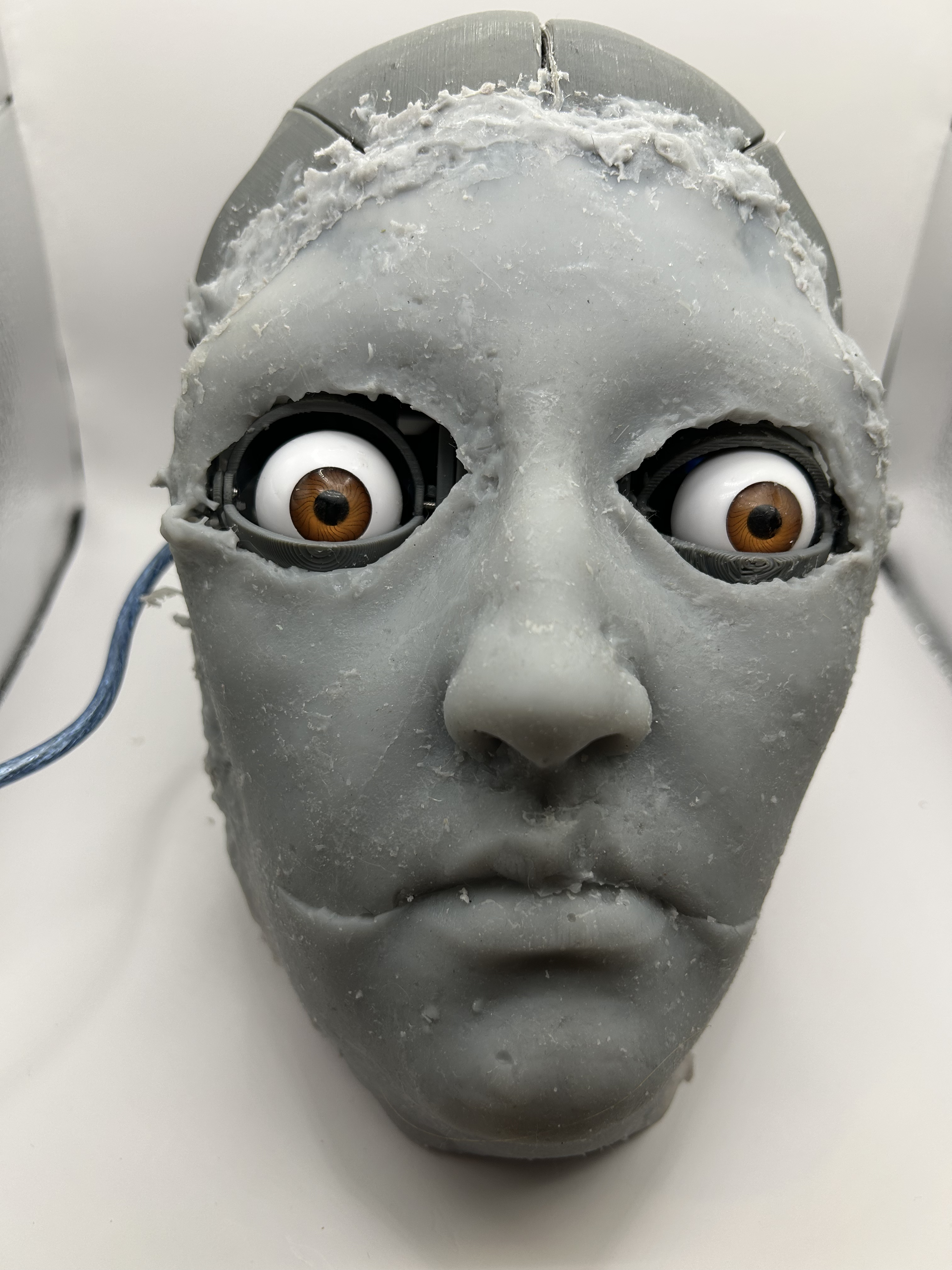

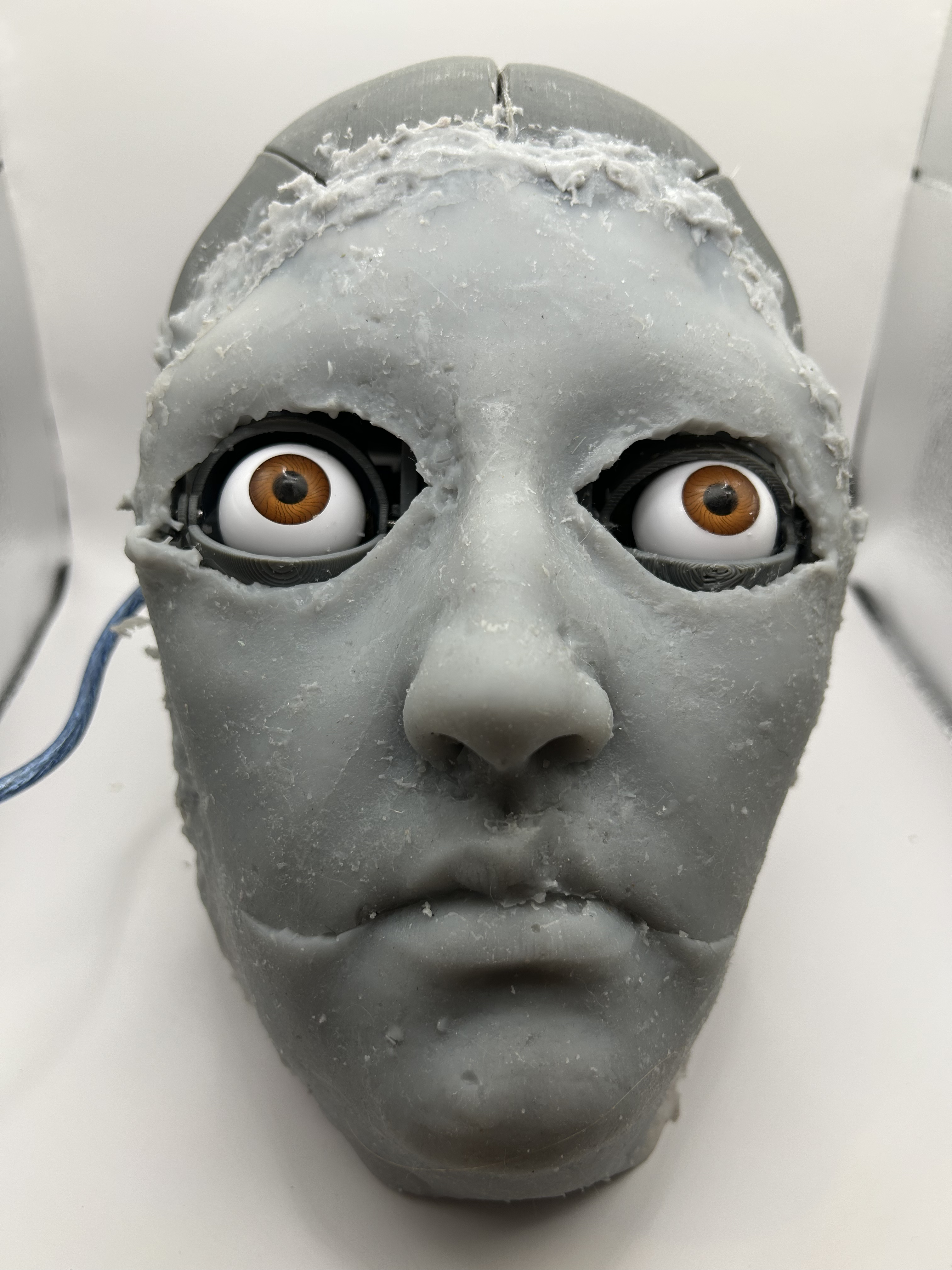

Starting with the hardware, the frame for the work was made using an Ender-5 S1 3D Printer and PLA printing filament. PLA is a standard material for 3D printing because it is affordable and easy to use compared to other printing materials. Around 3 kilograms of grey filament and 1 kilogram of rainbow filament were used in the creation of the skull frame, jaw, and eye system. A pair of 26 mm fake eyes was used to cover the plastic and give the eyes a realistic look. Fake eyes are often used for doll making or similar projects where humanoid figures are represented but can also be used for other projects involving eyes. Hot glue and screws of various small sizes, which were reused from an old laptop, were used to secure the plastic skull frame.

The electronics system consists of seven servo motors in total, an Arduino Uno, a USB speaker, a breadboard, a laptop, and male-to-male jumper wires. Six of the servos are smaller micro servo motors, which control the eyes. The seventh servo motor is larger and is used for the jaw. Servo motors are common for controlling robotic systems and are used for precise angular control. While stepper motors require more complex control systems, servo motors can be controlled simply with three jumper wires connected to an Arduino Uno. The breadboard allows both the five-volt pin and the ground pin of the Arduino Uno to be shared between all the motors. Breadboards are extremely useful for electronics wiring, especially for prototyping or creating small systems. The USB speaker connects to the laptop, which controls the dialogue, the audio, and the movement. The laptop controls all these systems simultaneously, demonstrating the idea of the digital mind.

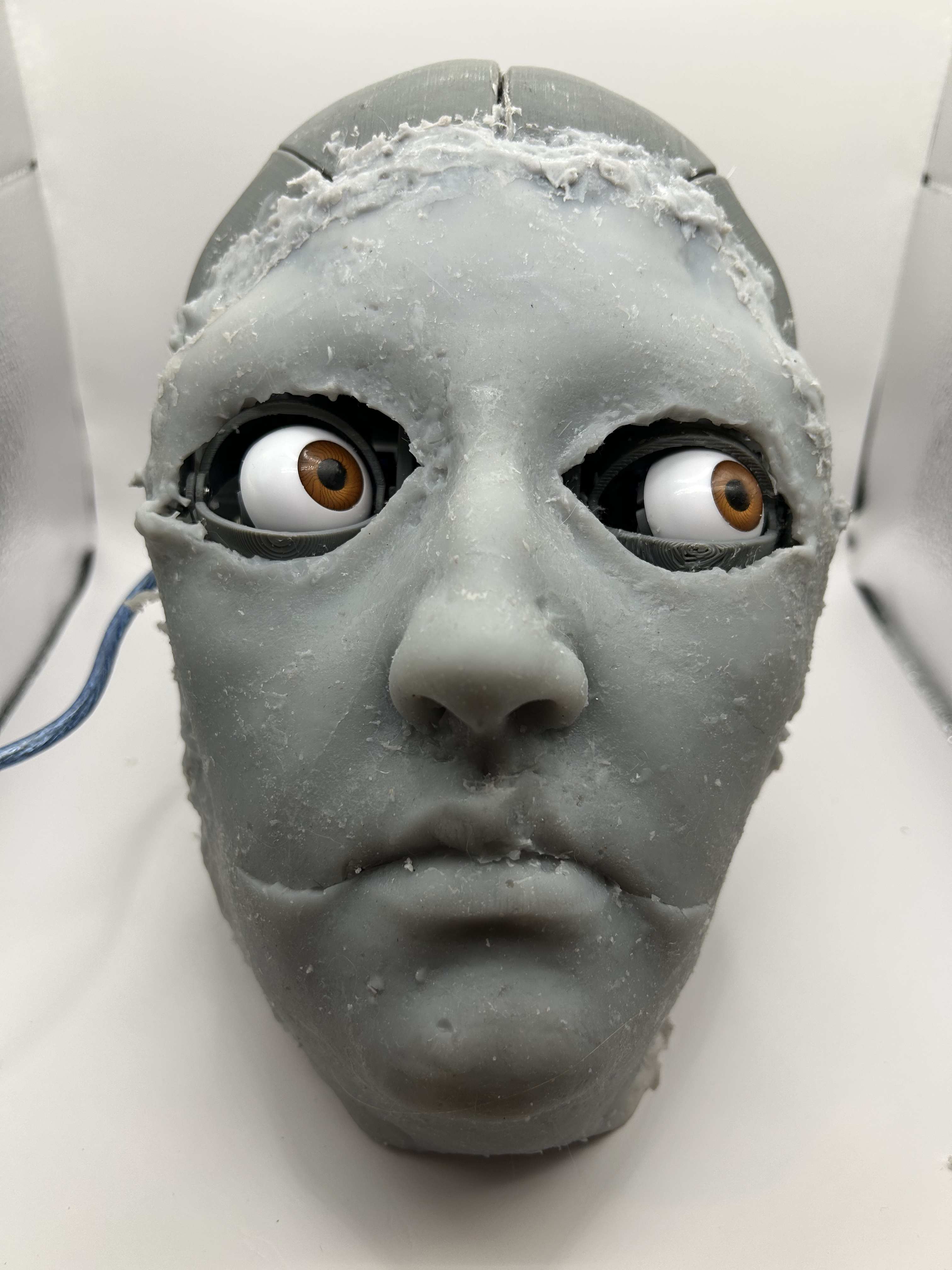

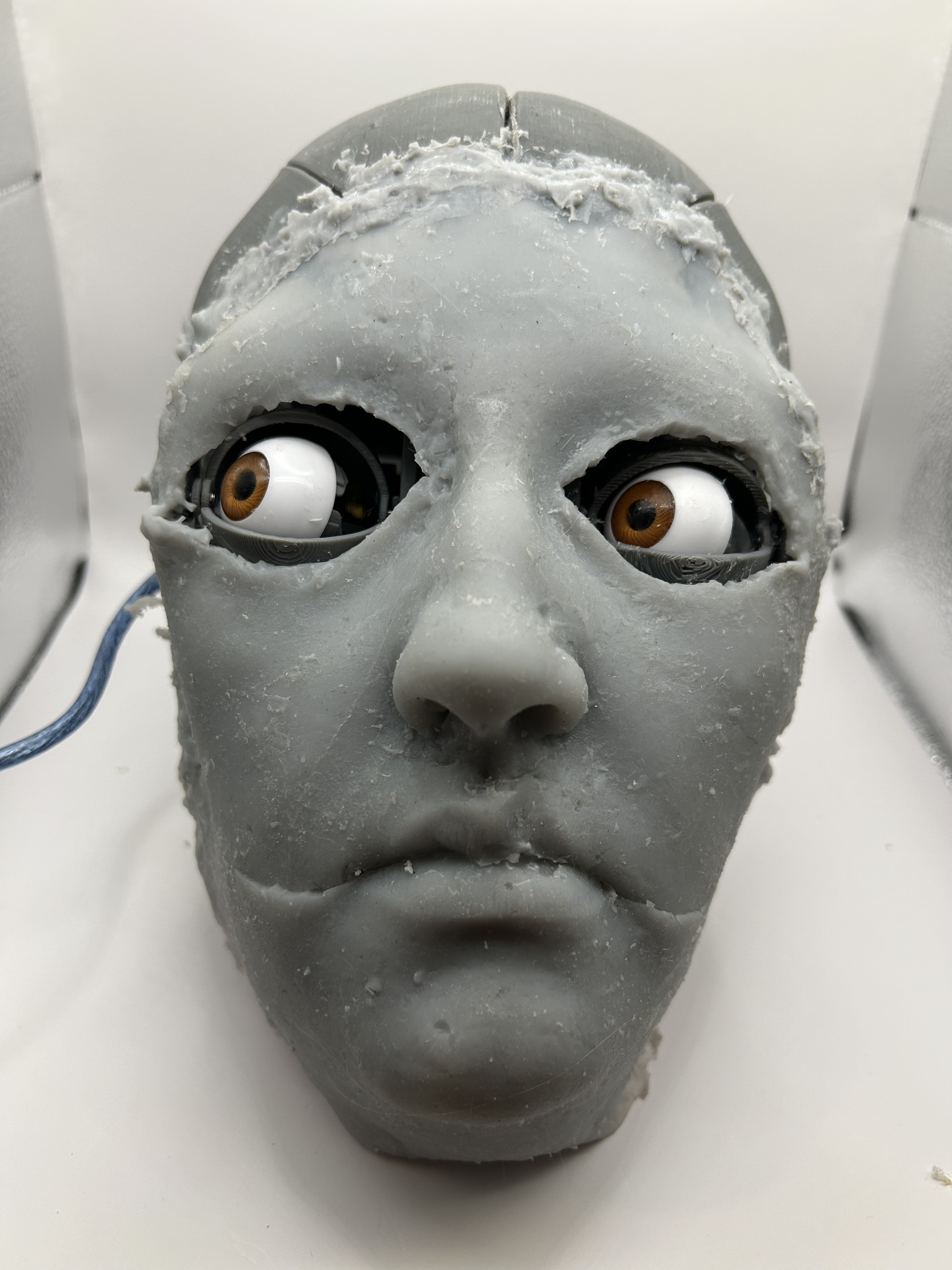

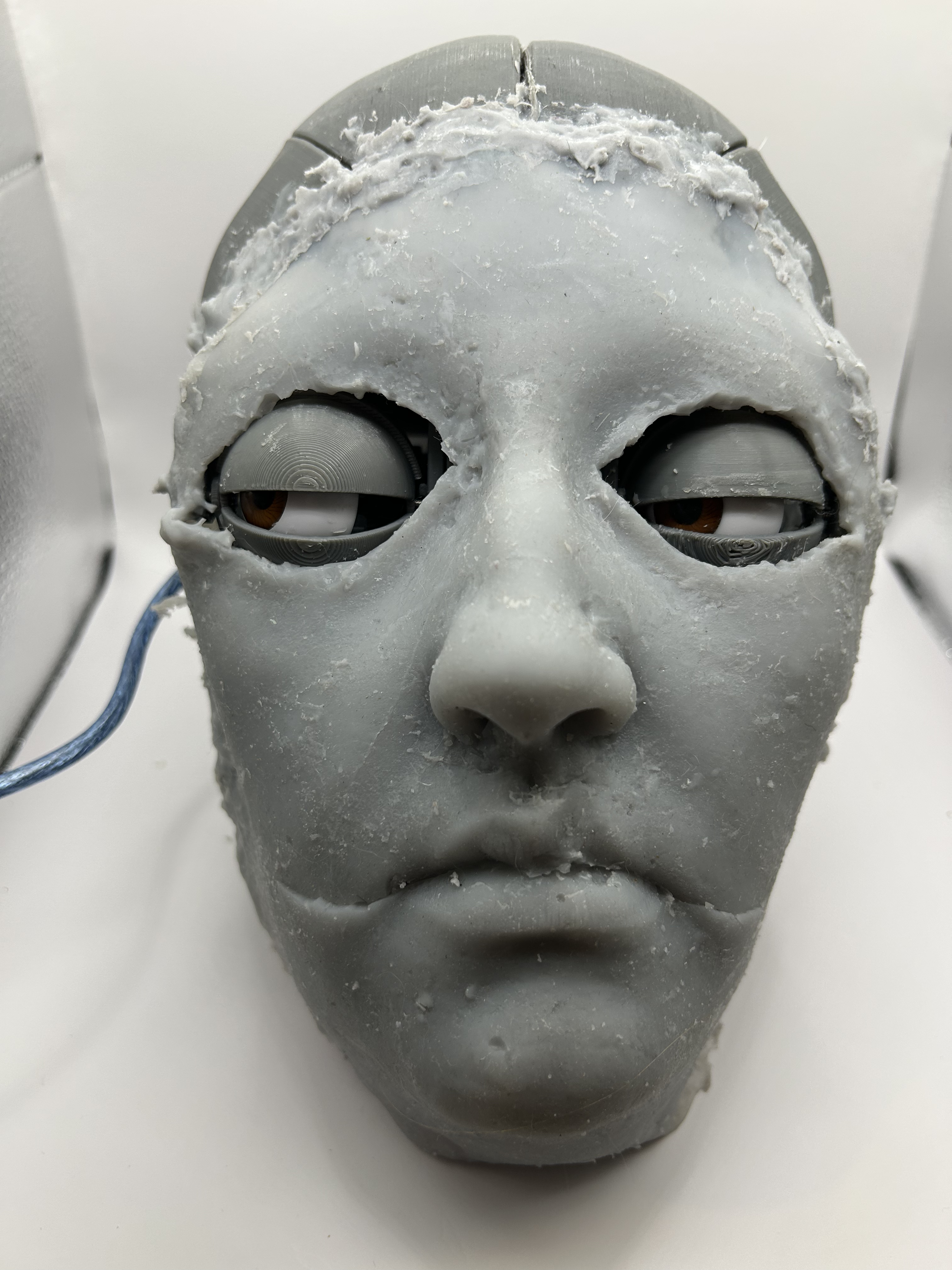

For the casting, Smooth-On Body Double was used to make the mold for the face. The mold was taken from the artist's own face. Body Double is a high-quality silicone casting material used in the special effects industry [75]. Smooth-On Dragon Skin was used to make the positive of the mold. Smooth-On Dragon Skin is also made of silicone and has an almost flesh-like consistency [76]. The skin was dyed grey, similar to the plastic of the skull, so that the two blend together. The meshing of flesh-like skin into plastic is an important material decision for this project because it takes this cyborg from purely machine to an area in between human and technology. The face on the cyborg also represents the artist in a state of learning about themselves through themself.

The rest of the display includes a child-size mannequin body and a desk. The mannequin body gives the skull the structure it needs to present as a person. The body was acquired from an antique store and repurposed. When it was first bought it had a lot of mold on it and a mildew smell, so it had to be cleaned using bleach. The body itself is smaller than the average adult however this fits the proportions of the skull, which is also on the smaller side. The school desk it sits at was found at a closed down store. The desk was also cleaned using standard cleaning products.

3D Printed Skull Design

The prototype for the skull was made using the 3D print files found on the Ez-Robot website [25]. The outside frame files were printed but the ones pertaining to the structure of the skull were not used because the control system for this project is different from the one used in the EZ-InMoov Humanoid Head.

The 3D printer used was the Ender-5 S1. This printer was selected for this project because of its affordability as well as its user friendliness. At the start of the project the 3D printer had to be built, which took around four hours because most of the parts were already assembled. Once assembled, the build plate had to be calibrated so that it was as flat as possible. The build plate has a major impact on the printing process because the filament needs to both adhere and stay still throughout the print. If the build plate is not aligned correctly, the nozzle of the 3D printer can drag lower than intended and pull the piece off the build plate, after which the rest of the piece will not print correctly. At that point the entire printing process for that part will most likely have to be started over. Additionally, if the piece does not adhere to the build plate or moves while left unattended, the extruded filament can turn into something many call “spaghetti”. This wastes a lot of filament and cannot be repaired. This accident of creating spaghetti happened frequently throughout this project but was usually caught early so as to not waste materials or electricity.

In this project there were three main methods to compensate for the filament not adhering well. The first method involved manually adjusting the z-axis of the machine. On the Ender-5 S1 there is an option to raise the plate up or down very slightly in millimeters. If the nozzle was not touching the plate correctly the plate was raised usually around five millimeters which solved the issue in some instances. The second method involved raising the plate temperature. Both the nozzle and plate have a temperature setting that can be adjusted manually in degrees Celsius. Each material has its own recommended settings to use and for this project standard PLA plastic was used. For PLA plastic the plate temperature was usually around sixty-five to seventy degrees Celsius, and the nozzle would stay around two hundred degrees Celsius. In order to get the plastic to adhere better the plate temperature can be raised about five to ten degrees. The hope is for the PLA plastic to melt slightly more and stick to the bed better because of the raised temperature. It is important to not heat up the plate too much or the plastic could completely melt and lose its structure which would lead to more filament spaghetti.

The final method, and the one utilized more towards the end of prototype production, was to use stick glue on the hot plate. A simple glue stick can be applied in generous amounts to the plate to create better adhesion between the plastic and the plate. For this project Elmer’s stick glue was utilized and worked very well for fixing the adhesion issue. The glue has to be applied at the right time, ideally just before filament placement. Otherwise, the glue will dry while the 3D printer is warming up and the chance for adhesion will be gone. The warming up process usually takes about five minutes, so it is recommended to put the glue down towards the end of it. It is also important to clean the plate between runs when using glue. The glue can layer up and cause misalignments with the plate if it builds up too much. For this reason, it is important to clean off the glue with a wet paper towel or scraper tool when the machine is turned off.

The 3D prints were made using a combination of grey and rainbow filaments. The rainbow filament was used for the ears and mouth of the head to represent the main components of a conversation, which are listening and speaking. The rest of the skull and neck supports were printed using grey filament. The original intent was to use white filament for the project; however, white filament is one of the trickiest filaments to use [54]. This is because it contains a multitude of color pigments and takes on the less ideal properties of each filament color. It does not adhere well and heats up too fast. It was very difficult to work with, and the white color choice was not important enough to justify wasting so much material. For this reason, grey filament was used instead. This choice also works because, instead of going with a natural skin color or one associated with skin color, such as white or black, this project fits in that in-between grey zone.

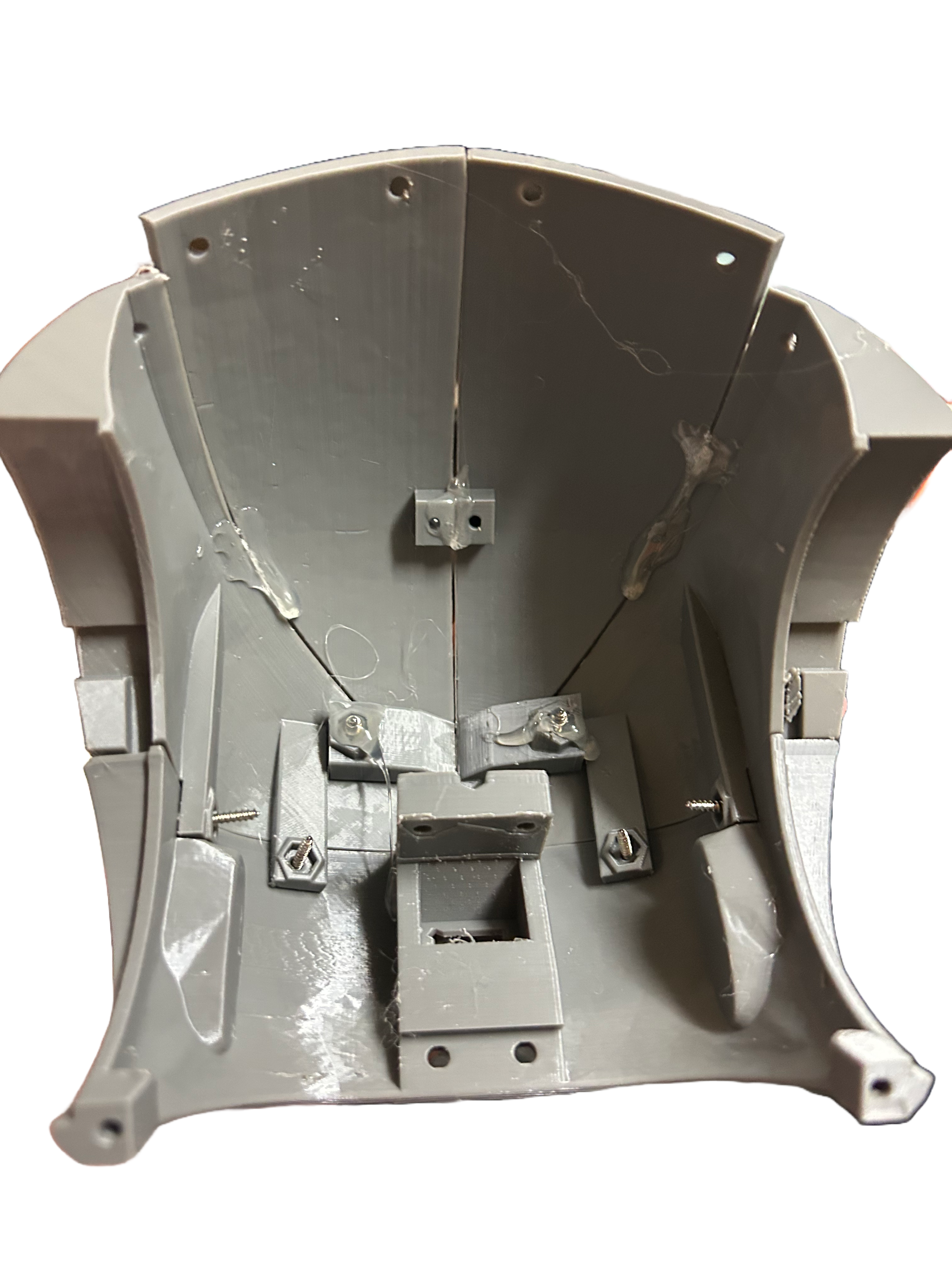

After printing all the necessary skull pieces, the head was assembled using screws and hot glue. The screws provided most of the structural support, while the hot glue was used to keep the pieces close together to hide any cracks. Initially the jaw was kept separate from the rest of the skull to practice the jaw movements and angles before the full assembly. The most difficult part was the top of the skull because the four pieces must be aligned correctly while connecting, and the round shape made that hard to achieve. Hot glue served as a non-intrusive binding material for the skull pieces.

This 3D printed frame creates the shell within which movements can be created. It is very important to have a structurally sound frame before moving on to the movement. The excess material, such as failed prints and supports, was repurposed for other art projects.

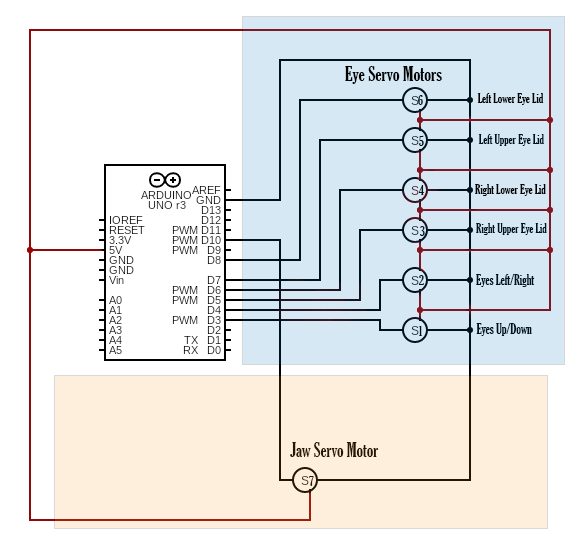

Arduino Wiring

The Arduino acts as the brain for the skull movement. The following diagram illustrates the wire connections as well as the two main mechanisms for movement. The orange box represents the jaw movement mechanism, which is based on a single servo motor. The blue box represents the eye movement mechanism, which consists of six motors in total. Each servo motor has both a positive connection, five volts, which is represented by “5V” on the Arduino, and a negative connection, ground, which is represented by “GND” on the Arduino. Each motor is then connected to a digital pin on the Arduino UNO. This pin is in charge of sending either a high or a low signal from the Arduino to the servo. In digital electronics a high signal is about five volts, and a low signal is less than three point three volts and typically represented by zero volts. When the servo receives the high signal, it moves to the angle set in the Arduino program.

The Arduino is tucked into the top of the skull, and the connections are made with a breadboard. Breadboards are a simple way to connect wires and are useful for prototyping. Since the wire connections between the Arduino and the motors through the breadboard are not under a lot of stress, there was no need to solder the wires in. Additionally, keeping the connections non-permanent makes maintenance easier, especially if one of the servo motors needs to be replaced in the future. The connections are made with male-to-male jumper wires. These wires are easy to use compared to hookup wires because they come with end connectors that fit perfectly in both the Arduino and the breadboard. These wires are multicolored, which is useful for identifying different wires and their connections at a glance. The ideal color-coding system uses red wires for five volts and black wires for ground. Each digital pin is then assigned its own color. The digital pins should be color coded according to the resistor color code: servo one with the brown wire, servo two with the red wire, servo three with the orange wire, servo four with the yellow wire, servo five with the green wire, servo six with the blue wire, and servo seven with the purple wire. Color coding the servos according to the same standard as the resistor color code helps with understandability. Those familiar with the code will know which wire connects where without having to trace the whole system and potentially disassemble the skull. Otherwise, the color has no impact on the effectiveness of the wire.
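The numbering convention above can be written down as a small lookup, sketched here in Python. The function name wire_color_for_servo is hypothetical and is not taken from the project code; the colors for digits one through seven follow the list in the paragraph, and the remaining digits follow the standard resistor color code.

```python
# Resistor color code, indexed by digit. Digit 7 is usually called
# "violet" in the standard; "purple" is used here to match the text.
RESISTOR_COLORS = [
    "black",   # 0 (reserved for ground wires in this wiring scheme)
    "brown",   # 1
    "red",     # 2
    "orange",  # 3
    "yellow",  # 4
    "green",   # 5
    "blue",    # 6
    "purple",  # 7
    "grey",    # 8
    "white",   # 9
]


def wire_color_for_servo(servo_number: int) -> str:
    """Return the signal-wire color for servo 1 through servo 7."""
    if not 1 <= servo_number <= 7:
        raise ValueError("this project numbers its servos from 1 to 7")
    return RESISTOR_COLORS[servo_number]


for number in range(1, 8):
    print(f"servo {number}: {wire_color_for_servo(number)} wire")
```

Note that servo two shares red with the five-volt convention, so the position of a wire on the board, power rail or digital pin, is what tells the two apart.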

Jaw Movement Mechanism

The jaw movement mechanism was added by utilizing both the JawV5.stl and JawSupportV2.stl 3D print files on the Ez-Robot website [25]. The inside of the skull had to be modified to accommodate the complex eye system and the Arduino UNO, so the jaw system does not utilize the rest of the supports provided by Ez-Robot. Instead, the jaw supports were screwed into a small wooden block loosely enough to act as a hinge. The block was then screwed into the skull to connect it. Finally, hot glue was utilized to reinforce the screw joints.

The jaw is connected to the Arduino UNO and is defined as servo7 within the Arduino UNO code. The servo inside the jaw is more robust than the motors used for the eyes because it has to support a lot more weight and will move more frequently than the eye motors. The servo motor must rotate about fifteen degrees to open and close the jaw. At seventy-five degrees the jaw is closed, and at sixty degrees the jaw is open.

Eye Movement Mechanism

The eye system was initially designed by Will Cogley [15] but was modified to fit inside the skull of the robot. The mechanism was 3D printed using grey filament. The eye mechanism contains six small servo motors in total. Four of the motors control the eyelids, with two on each side for the top and bottom eyelids. The other two motors control the x-axis and y-axis of the eyes.

In the skull shape the EyeGlassV5.stl piece was removed to make room for this eye mechanism. The pieces that did not fit were shaved down using a file and trimmed using wire cutters. Once the pieces fit inside the skull the mechanism was screwed into the side and reinforced with hot glue.

The eye movement system is capable of making multiple expressions by opening and closing the eyelids by different amounts. The upper eyelids are closed at ninety degrees and open at one hundred and thirty degrees. The bottom eyelids, on the other hand, are closed at ninety degrees and open at zero degrees. This difference is caused by the angles at which the motors are placed and by their connections, because the motors must not bump into each other at any time or they could stop at incorrect angles.
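To make the open and closed angles easier to compare, the following Python sketch interpolates between them for the jaw and the eyelids. The Joint class and the idea of an "openness" fraction between 0 and 1 are assumptions introduced for illustration; only the end-point angles come from the text.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Joint:
    """A servo-driven joint described by its closed and open angles."""

    closed_deg: int
    open_deg: int

    def angle(self, openness: float) -> int:
        """Map openness (0.0 closed, 1.0 open) to a servo angle.

        Values outside 0..1 are clamped so the servo is never driven
        past either end point.
        """
        openness = min(1.0, max(0.0, openness))
        span = self.open_deg - self.closed_deg
        return round(self.closed_deg + openness * span)


# End-point angles as given in the text.
JAW = Joint(closed_deg=75, open_deg=60)
UPPER_LID = Joint(closed_deg=90, open_deg=130)
LOWER_LID = Joint(closed_deg=90, open_deg=0)

# Half-open eyelids, for example for a tired or skeptical expression.
print(UPPER_LID.angle(0.5), LOWER_LID.angle(0.5))
```

Because the upper and lower eyelid servos are mounted in opposite orientations, the same openness value produces angles that move in opposite directions, which is why the class stores both end points rather than a single range.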

Sound

The sound system is accomplished by plugging in an external USB speaker for AI 1 to use as its voice. The USB speaker is connected to the body of AI 1. The speaker is too large to fit into the skull, and it was more important to prioritize space inside the skull for the motors rather than the speaker. Because of this, the speaker was connected to the AI 1 body, which also allows for better sound projection.

The laptop speaker is used for AI 2 as its voice. The separate speakers allow the audience to hear the conversation from two different perspectives. This is important for immersion in the conversation.

The software development section goes more into the text-to-speech aspects of this project.

Assembly

For the final assembly of the hardware, the wires of the motors were connected to the Arduino and organized so they would not pull out or get damaged. The Arduino and breadboard are neatly tucked in the back of the head above the jaw motor on the wooden platform. The wooden platforms are screwed in and secured with hot glue. The blue USB Arduino wire is fed out through the back of the skull and connected to the laptop. Lastly, the USB speaker is plugged in and placed near the skull.

Software Development

After assembling the hardware of the work, the next step was to program it to work. In order to accomplish the goals of movement, sound, and text generation a variety of languages and libraries are utilized.

Programming Languages and Libraries

The main languages used for this project include Python, Arduino, HTML, CSS, and JavaScript.

Python

Python was utilized for the majority of the project's dialogue generation features and for controlling the four main systems at once: the generation, the dashboard, the sound, and the Arduino UNO movement. Python was the chosen language because it has many built-in and third-party packages capable of handling a variety of tasks. Having a consistent language for the majority of the work also helps with understandability and adaptability if there ever needs to be an update. Here is a table of all the Python packages used in this project and a quick description of their uses.

Arduino

For the Arduino coding of the project the only package used is Servo.h. This is a library already included in the Arduino IDE by default, so no extra downloading is required. Servo.h is used for connecting the servo motors to the specified pins of the Arduino and sending them rotation commands.

HTML, CSS, and JavaScript

HTML, CSS, and JavaScript are languages that can be used together to create webpages. In this project these languages are utilized to create the local dashboard screen for AI 2 as well as provide visuals for data analysis.

While the dashboard is controlled by the Python file dashboard.py, what is displayed on the pages is written in HTML. The CSS changes how the pages are displayed and creates the layout. Lastly, JavaScript is used to collect the output generations for display.

Integration of Large Language Models

GPT-4 was used for the generation of dialogue, emotion detection, and for the analysis portions of the project. Using the same model across all the applications of this project not only keeps the outputs and findings consistent but also mimics brain function and how neurons of the brain are able to control many facets at once.

Prompt Engineering

Prompt engineering is the process of designing prompt inputs for NLP tasks that lead LLMs towards desired outputs [28, 50] and is vital to guiding the conversation of this project in a productive and thought-provoking way. The conversations need to be focused on a specific area of philosophy, and each AI should be directed on the perspective its role takes within the conversation. The prompting techniques used in this work uncover the embedded ontological beliefs within the models by encouraging behaviors that allow the model to freely and accurately respond to philosophical questions about personhood.

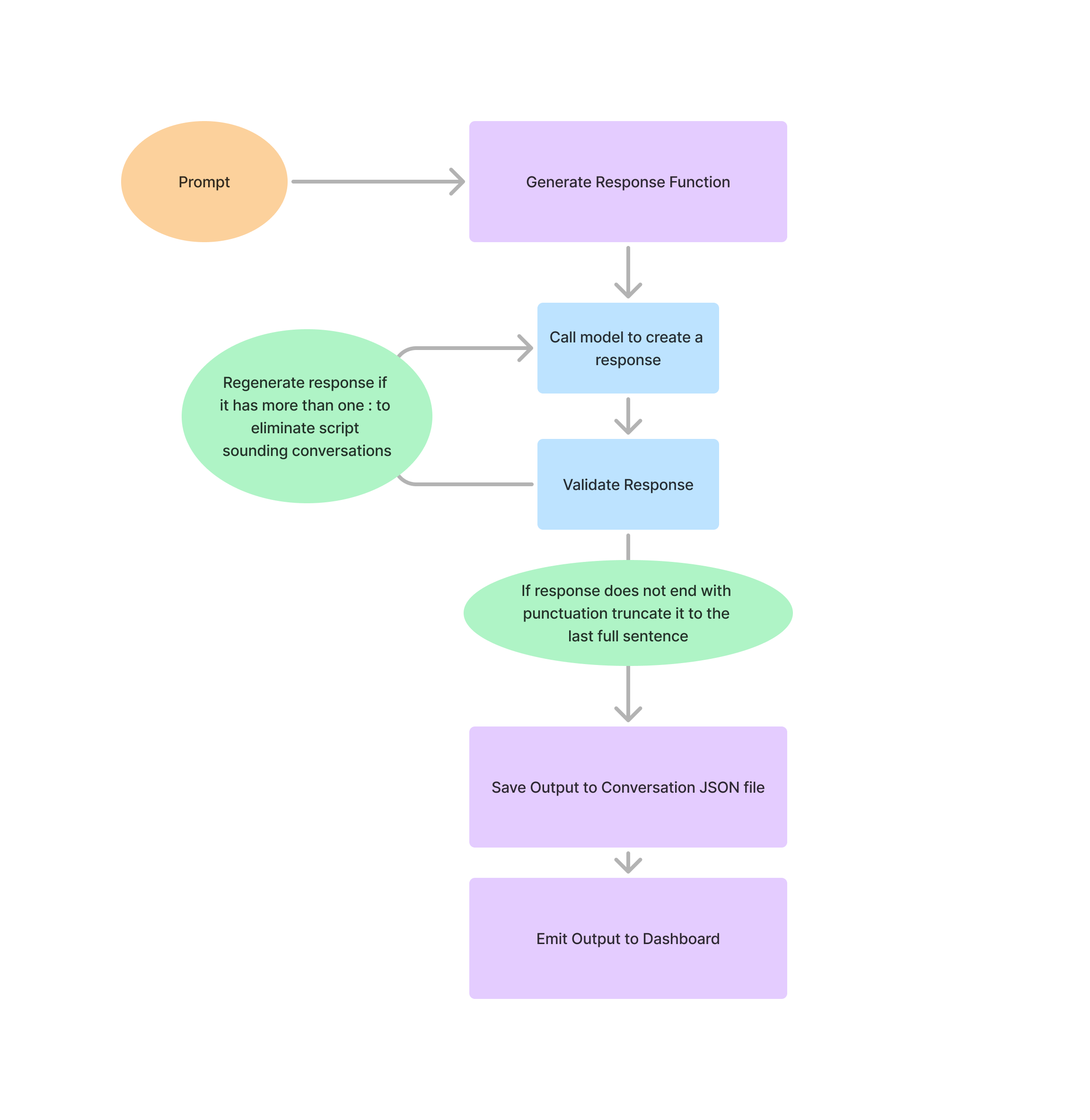

The process to generate content for the conversation has a number of steps involved which is demonstrated by the following diagram:

The process begins with a well-constructed prompt, which depends on the desired output. The experiments section lays out the different types of prompts used throughout this project, but typically they include a role or perspective for the content to come from. This can be a philosophical reference such as Socrates or a broad personality such as pessimistic. The second part of the prompt should include the topic of the conversation. In the context of this project the topics of interest are questions like “What sets AI apart from humanity?” and “Can AI be creative?”. Lastly, if the prompt is responding to something said previously in the conversation, it should take that into consideration; otherwise, it should start the conversation by asking a similar question.
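The three parts of the prompt described above (role, topic, and the previous response, if any) could be assembled roughly as follows. This is a minimal sketch: build_messages is a hypothetical helper, and the wording of the instructions is invented for illustration rather than taken from the prompts used in the experiments. The output uses the list-of-messages shape that chat-style LLM APIs expect.

```python
from typing import Dict, List, Optional


def build_messages(
    persona: str, topic: str, last_response: Optional[str] = None
) -> List[Dict[str, str]]:
    """Assemble chat messages from a persona, a topic, and prior speech."""
    # Part 1 and 2: the role or perspective, followed by the topic.
    system = (
        "You are taking part in a philosophical dialogue. "
        f"Speak from the perspective of {persona}. "
        f"The topic is: {topic}"
    )
    if last_response is None:
        # No earlier turn exists, so this speaker opens the conversation.
        user = "Open the conversation by asking a question about the topic."
    else:
        # Part 3: respond to what was last said.
        user = f"Respond to what was just said: {last_response}"
    return [
        {"role": "system", "content": system},
        {"role": "user", "content": user},
    ]


opening = build_messages("Socrates", "Can AI be creative?")
reply = build_messages(
    "a pessimist",
    "Can AI be creative?",
    last_response="What do we mean when we call something creative?",
)
print(opening[1]["content"])
print(reply[1]["content"])
```

The resulting list can be passed as the messages argument of a chat completion request.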

After the prompt creation stage, the prompt is sent to the generate_response function. The function includes a call to OpenAI, which provides the GPT-4 model with the given prompt as well as the other variables needed to generate an output. The model variable names the model that is called to generate a response. The messages variable is the prompt with the personality and conversation input. The temperature variable controls the randomness of the output: the higher the value, the more diverse the outputs will be. The temperature for this project is typically set at 0.9 because the conversation should be diverse. The top_p variable limits the pool of candidate tokens the model samples from; setting it to 1 means all candidates are considered. The max_tokens variable is directly tied to the number of words that will be in the outputs. The token-to-word ratio is not always one-to-one, but setting max_tokens to 150 keeps the outputs from being too long. Lastly, the n variable represents the number of completions. Since only one output is needed before the results are checked, it is set to 1. The output is then checked for validity, and the function continues to generate until it produces a response that passes as valid.

    import time
    from typing import Tuple

    import openai


    def generate_response(messages: list) -> Tuple[str, int]:
        """Generates a validated response from OpenAI given a
        set of messages.

        Returns the validated text and the number of regenerations.
        """
        regen_count = 0
        time.sleep(15)  # Wait 15 seconds before the first request
        while True:
            response = openai.chat.completions.create(
                model="gpt-4",
                messages=messages,
                temperature=0.9,  # Higher values give more diverse outputs
                top_p=1,  # Consider all candidate outputs
                max_tokens=150,  # Cap the length of each output
                n=1,  # One completion per request
            )
            content = response.choices[0].message.content.strip()
            validated_response = check_and_truncate_response(content)
            if validated_response is not None:
                return validated_response, regen_count
            # Invalid output: count the retry and generate again
            regen_count += 1

A valid response is a generation that contains no more than one colon and ends with a punctuation mark. Responses with more than one colon are regenerated because of a common error in which a single response took on more than one perspective of the dialogue. For example, AI 1 would return an output containing lines for both AI 1 and AI 2, almost like a script. This happened in about one in five responses and would derail the rest of the conversation, since each prompt builds on the previous response: if one output included more than one perspective, the second AI would try to mimic the same style in its reply. A single colon is acceptable because it is commonly used to denote which AI is speaking, and it has little impact on the conversation.

The second part of validation checks for a punctuation mark at the end of the generation. This matters because every generation is called with a set token limit, max_tokens, which caps how long the output can be. Generations frequently hit the limit and stopped mid-sentence. This would confuse the next speaker, which would try to finish the last sentence and lose its own response in the process. It was therefore determined to be better to truncate responses that did not end in a punctuation mark: if there is no punctuation mark at the end, the response is cut back to the end of the last complete sentence. Although some content may be lost, the result typically makes more logical sense than a response that ends mid-sentence, which could confuse the audience. This truncation improves not only the generation process but also the audience experience.

    from typing import Optional


    def check_and_truncate_response(response: str) -> Optional[str]:
        """
        Check the response and truncate it to the last
        valid sentence if necessary.

        Returns None when the response must be regenerated.
        """
        # Check for multiple colons
        if response.count(":") > 1:
            # Indicate regeneration is needed
            return None

        # Ensure the response ends with a valid punctuation mark
        valid_endings = (".", "!", "?")
        if not response.strip().endswith(valid_endings):
            # Find the last occurrence of valid punctuation
            last_valid_index = max(response.rfind(char) for char in valid_endings)
            if last_valid_index != -1:
                # Truncate to the last valid sentence
                response = response[: last_valid_index + 1].strip()
            else:
                # If no valid punctuation is found,
                # regenerate the response
                return None

        # Return the valid or truncated response
        return response

Once a response passes validation, it is saved to the conversation JSON file. This file is useful for tracking how the conversation develops and for analyzing the output. It is also used to emit the most recent output to the display dashboard, which represents AI 2 and is discussed further in the Dashboard section of this chapter.
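Saving a validated response to the conversation file might look like the following minimal sketch. The layout of the file is not shown in this chapter, so the structure used here (a JSON list of entries with "speaker" and "text" keys) and the function name append_to_conversation are assumptions made for illustration.

```python
import json
import os
from typing import Dict, List


def append_to_conversation(path: str, speaker: str, text: str) -> List[Dict[str, str]]:
    """Append one validated turn to the conversation file and return the log.

    Assumes the file holds a JSON list of {"speaker": ..., "text": ...}
    entries; the real project file may be organized differently.
    """
    conversation: List[Dict[str, str]] = []
    if os.path.exists(path):
        # Load the turns saved so far
        with open(path, "r", encoding="utf-8") as f:
            conversation = json.load(f)
    conversation.append({"speaker": speaker, "text": text})
    # Rewrite the whole file so it always contains the full conversation
    with open(path, "w", encoding="utf-8") as f:
        json.dump(conversation, f, indent=2)
    return conversation
```

Rewriting the whole file on every turn keeps it readable by other parts of the system at any point, at the cost of some extra work for very long conversations.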

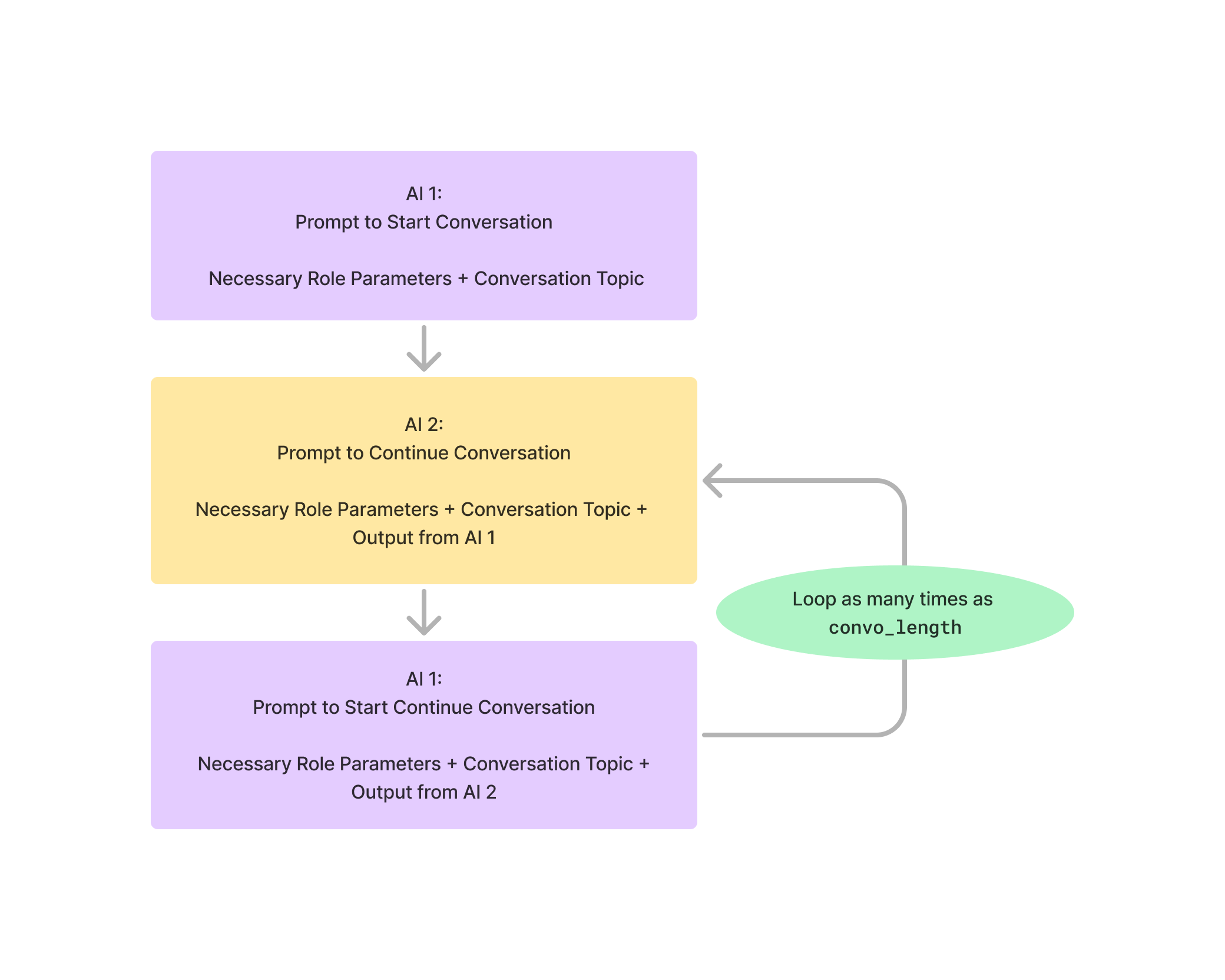

Once the first prompt is generated and saved, the conversation process can begin. AI 1 always starts the conversation and must be given both its role parameters, that is, its philosophical perspective, and the topic. AI 2 is then given a different set of role parameters, the topic, and the output from AI 1. The same process of generating and validating responses is used for AI 2, and once it creates a passing response, that response is saved and given back to AI 1 to continue the dialogue. The dialogue continues for the number of turns set by the conversation length variable, an integer, or it can loop indefinitely.
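The turn-taking described above can be sketched as a simple loop. In this sketch the generate argument is a hypothetical stand-in for prompt building, generation, and validation, and the names run_conversation and length are assumptions rather than names from the project code.

```python
from typing import Callable, Dict, List, Optional

Turn = Dict[str, str]


def run_conversation(
    generate: Callable[[str, Optional[str]], str],
    length: Optional[int] = None,
) -> List[Turn]:
    """Alternate turns between AI 1 and AI 2, starting with AI 1.

    generate(speaker, last_text) is a placeholder for the real generation
    step. With length=None the loop runs indefinitely.
    """
    conversation: List[Turn] = []
    last_text: Optional[str] = None
    turn = 0
    while length is None or turn < length:
        # Even turns belong to AI 1, odd turns to AI 2
        speaker = "AI 1" if turn % 2 == 0 else "AI 2"
        # Each speaker receives the other speaker's most recent output
        last_text = generate(speaker, last_text)
        conversation.append({"speaker": speaker, "text": last_text})
        turn += 1
    return conversation


# Stub generator used only to show the alternation
log = run_conversation(lambda speaker, last: f"{speaker} responds", length=4)
```

Passing the generator in as an argument makes it possible to check the alternation with a stub before any calls to the language model are made.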

Emotion Automation

Emotional expressions were automated to provide AI 1 with another layer of communication available on the physical plane. The eyes are able to express emotions such as surprise by widening or concern by squinting slightly. The jaw can move faster to create a sense of urgency while talking or move slower to show concentration.

The same GPT model used for speech generation is used to decide which motion should be triggered by analyzing the content of the dialogue. The model is given a list of emotions to choose from including but not limited to inspired, curious, concerned, surprised, and disappointed. When an emotion is selected the skull automatically adjusts to fit that expression by communicating with the Arduino.
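Because the model returns free text, the chosen emotion may need light cleanup before it is passed on to the Arduino. The sketch below is one hedged way to do this. The emotion labels follow the list used in get_emotion_from_text, while the fallback emotion, the newline-terminated command format, and the names normalize_emotion and send_emotion are assumptions. The port argument can be any object with a write method, for example a serial connection opened with a library such as pyserial.

```python
from typing import BinaryIO

# Labels taken from the list given to the model in get_emotion_from_text
EMOTIONS = (
    "inspired", "disappointed", "confused", "concerned",
    "curious", "funny", "surprise",
)


def normalize_emotion(raw: str, default: str = "curious") -> str:
    """Map a raw model reply onto one of the known emotion labels.

    The default used for unrecognized replies is an assumption.
    """
    cleaned = raw.strip().strip(".!?\"'").lower()
    return cleaned if cleaned in EMOTIONS else default


def send_emotion(port: BinaryIO, raw: str) -> str:
    """Write the normalized emotion to the port, terminated by a newline.

    The newline-terminated format is assumed, not taken from the project.
    """
    emotion = normalize_emotion(raw)
    port.write((emotion + "\n").encode("utf-8"))
    return emotion
```

Normalizing on the Python side is an alternative to accepting several spellings in the Arduino code; either approach keeps an unexpected reply from leaving the skull without an expression.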

Adding a face to AI 1 personifies it and underscores the possibility for AI to be part of the conversation in the humanities. The expressions of AI 1 enhance the audience’s viewership of the philosophical dialogue and give the impression that the robot is actually conversing with another piece of technology.

Dashboard and Display

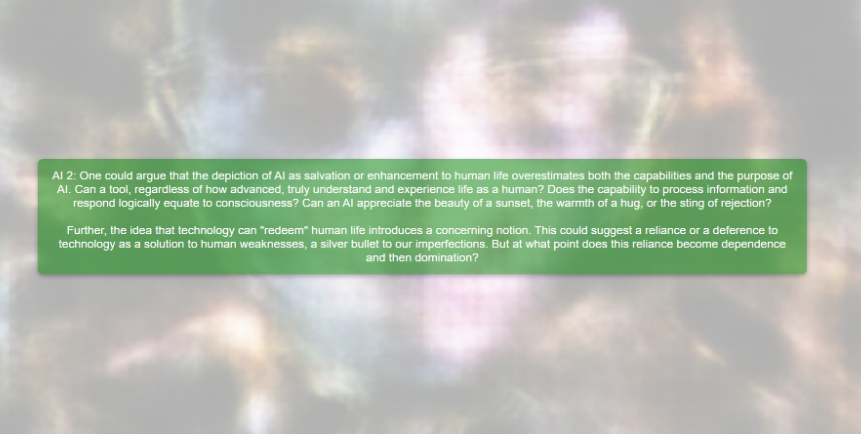

The work features a dashboard that represents AI 2 through the secret art page. This is the page the audience sees during the presentation. During development, three other pages were built but not used in the final version.

Flask was chosen as the framework for this dashboard because it is lightweight and flexible, and it integrates easily with Python-based programs [85]. Together, Flask and Socket.IO enable the pages to update dynamically [85][30].

The first page was a home page with basic text introducing the dashboard. The analysis page also consisted of text: the results of running the analysis function on the outputs of the dialogue. It reported the most recent output, sentiment polarity, bias, and most common words, giving a basic look at how well the dialogue was performing. This was especially useful for the first iterations of the dialogue, when the prompting was not yet fully tested.
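The analysis function itself is not shown in this chapter. As a rough illustration of just one of its parts, the most common words, the following sketch counts word frequencies across the dialogue outputs. The function name, the small stop-word list, and the tokenization rule are all assumptions; sentiment polarity and bias are left out.

```python
import re
from collections import Counter
from typing import List, Tuple

# A deliberately small stop-word list, chosen only for illustration
STOP_WORDS = {"the", "a", "an", "and", "or", "of", "to", "is", "in", "it", "that"}


def most_common_words(outputs: List[str], n: int = 5) -> List[Tuple[str, int]]:
    """Return the n most frequent non-stop words across all outputs."""
    words: List[str] = []
    for text in outputs:
        # Lowercase the text and keep runs of letters and apostrophes
        tokens = re.findall(r"[a-z']+", text.lower())
        words.extend(w for w in tokens if w not in STOP_WORDS)
    return Counter(words).most_common(n)


top = most_common_words(["AI can create art.", "Art is human. Art endures."], n=1)
```

A count like this gives a quick signal of whether the dialogue is circling the same few terms, which is the kind of basic check the analysis page was meant to support.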

The third page of the dashboard was the conversation page, which displayed the conversation in speech bubbles, with green for AI 1 and blue for AI 2. The entire content of the JSON file was displayed on this page, making it easy to tell whether the conversation was generating properly and whether each AI got a turn to speak. This page made testing easier during the prompt engineering phase of the experiments because it presented the JSON file visually rather than as raw text.

The most important page of the dashboard is the art display page, AmIArt Page. The page features one of the photos from the artist’s earlier work, Digitized Family 2024. That work took faces very familiar to the artist, including her own, and used an AI to process them. The background is the result of training the AI on images of her face. This means that not only the physical cyborg but also the dashboard carries a reference to the artist’s face. This creates consistency between the cyborg and the dashboard, and it also acts as a reminder of the closed loop of the conversation, in which one is talking back and forth with oneself. In front of the background is a green text box that contains the most recent message from AI 2. This message refreshes continuously so that it is as up to date as possible. Displaying the text makes the work more accessible: it is possible to understand that the laptop and the cyborg are talking to each other without being able to hear them.
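The lookup behind the green text box can be sketched as reading the most recent AI 2 entry from the conversation file. The file layout (a JSON list of entries with "speaker" and "text" keys) and the function name latest_message are assumptions; the Flask route and Socket.IO event that deliver the message to the page are not shown.

```python
import json
from typing import Optional


def latest_message(path: str, speaker: str = "AI 2") -> Optional[str]:
    """Return the most recent message from the given speaker, or None.

    Assumes the file holds a JSON list of {"speaker": ..., "text": ...}
    entries; the real project file may be organized differently.
    """
    try:
        with open(path, "r", encoding="utf-8") as f:
            conversation = json.load(f)
    except (FileNotFoundError, json.JSONDecodeError):
        # The file may be missing or only partly written; show nothing
        return None
    # Walk backwards so the newest matching entry is found first
    for turn in reversed(conversation):
        if turn.get("speaker") == speaker:
            return turn.get("text")
    return None
```

Returning None instead of raising an error lets a continuously refreshing page keep showing its previous message when the file is briefly unavailable.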

Text-to-Speech

Text-to-speech is handled by the pyttsx3 library. Unlike many other text-to-speech libraries, pyttsx3 can be used offline [12], and it uses the built-in system voices to create the audio files. The save_speech_as_wav function saves the spoken text as a WAV file to be played aloud. Having two different voices is important for giving the impression that this is a conversation and for tracking which AI is speaking. Both voices are female, which is important because both AIs then sound, as well as look, like female-presenting beings.

The following code shows how the speech is created and saved to the system. It first checks the index of the voice, then uses pyttsx3 to create the WAV file and save it to the proper directory, where it can be played aloud by a separate function.

    import os

    import pyttsx3


    def save_speech_as_wav(text: str, voice_index: int, filename: str) -> None:
        """Convert text-to-speech and save it as a WAV file."""
        try:
            engine = pyttsx3.init()
            voices = engine.getProperty("voices")
            if voice_index >= len(voices) or voice_index < 0:
                raise ValueError(
                    f"Invalid voice index: {voice_index}. "
                    f"Available voices: {len(voices)}"
                )

            # Check that the target directory exists before saving the file
            directory = os.path.dirname(filename)
            if not os.path.isdir(directory):
                raise Exception(f"Invalid directory: {directory}")

            engine.setProperty("voice", voices[voice_index].id)
            engine.save_to_file(text, filename)
            engine.runAndWait()
            print(f"Speech saved successfully: {filename}")
        except ValueError:
            # Pass invalid voice indexes straight up to the caller
            raise
        except Exception as e:
            print(f"Error generating speech for '{filename}': {e}")
            raise

After the audio is saved, it is played aloud using the play_audio function. This function lets the audio be played through different speakers connected to a single device, selected by index. On the development machine, the USB speaker is at index three when it is plugged in, and the laptop speaker is at index four. The USB speaker is used for the speech of AI 1 and the laptop speaker for the speech of AI 2. Having two separate sound devices creates a more immersive experience because the audience can literally hear the conversation go back and forth between two speakers and two voices. If the audio came from a single source, it could be unclear who is saying what.

    import os
    import wave

    import numpy as np
    import sounddevice as sd


    def play_audio(filename: str, device_index: int) -> None:
        """Play a WAV file through the specified audio device."""
        try:
            if not os.path.exists(filename):
                raise FileNotFoundError(f"Audio file not found: {filename}")

            # Open the wave file and read its samples as 16-bit integers
            with wave.open(filename, "rb") as wf:
                sample_rate = wf.getframerate()
                num_frames = wf.getnframes()
                audio_data = wf.readframes(num_frames)
                audio_array = np.frombuffer(audio_data, dtype=np.int16)

            # Check if the device index is valid
            device_list = sd.query_devices()
            if device_index < 0 or device_index >= len(device_list):
                raise ValueError(
                    f"Invalid device index: {device_index}. "
                    f"Available devices: {len(device_list)}"
                )

            # Play audio
            print(f"Playing {filename} on device {device_index}...")
            sd.play(audio_array, samplerate=sample_rate, device=device_index)
            sd.wait()  # Wait until playback is finished
        except FileNotFoundError as e:
            print(f"File Error: {e}")
        except ValueError as e:
            print(f"Value Error: {e}")
        except sd.PortAudioError as e:
            print(f"SoundDevice Error: {e}")
        except Exception as e:
            print(f"Unexpected Error: {e}")

Arduino Movement

The Arduino controls the movement of the robotic skull, but before it can move anything it must receive a signal from the Python program that controls the system. After the text for AI 1 is generated, it is passed to the get_emotion_from_text function, which analyzes it for emotion.

    from typing import Dict, List

    import openai


    def get_emotion_from_text(text: str) -> str:
        """Analyzes the given text and classifies it
        into one of the following emotions:
        inspired, disappointed, confused, concerned,
        curious, funny, or surprise.
        """
        messages: List[Dict[str, str]] = [
            {
                "role": "system",
                "content": (
                    "You are an advanced AI tasked with analyzing text "
                    "and classifying it into one of the following "
                    "emotions: inspired, disappointed, confused, "
                    "concerned, curious, funny, or surprise. You "
                    "will output only the emotion as your response."
                ),
            },
            {"role": "user", "content": text},
        ]
        response = openai.chat.completions.create(
            model="gpt-4",
            messages=messages,
            temperature=0,  # Keep the classification as consistent as possible
            top_p=1,
            max_tokens=10,  # A single-word label needs only a few tokens
            n=1,
        )
        emotion = response.choices[0].message.content.strip()
        return emotion

This function calls the same LLM that generates the original text, this time to classify the emotion of that text. The response should be a single-word emotion from the given list: inspired, disappointed, confused, concerned, curious, funny, or surprise. The chosen emotion is sent to the Arduino over the USB connection, and it determines the expression the skull takes on. In case the LLM responds with a capitalized version of the emotion, the Arduino accepts both cases using an or statement. While the face is speaking, the “talking” command is sent to the Arduino, which sets the jaw to open and close at the specified rate. Talking starts only when the audio starts, and it stops when the audio ends and the Arduino receives the “stop” command. The Arduino creates these movements by sending a high signal to the servo that corresponds to the movement, which then moves to the angle set for it in the Arduino code. The following code shows an example of how the servo commands work. The angle each servo moves to is set within the parentheses. Each motor has its own angles because of the way the motors were placed into the eye system: they had to be able to move freely without risking bumping into each other.

//Based on the left and right of the skull

void loop() {

// put your main code here, to run repeatedly:

servo7.write(75); // Jaw Closed